Difference between revisions of "FAQs"

(→There is a firewall between the agent and the server you will find following logs) |

(→Ossec issues in linux agent.) |

||

| (390 intermediate revisions by 5 users not shown) | |||

| Line 1: | Line 1: | ||

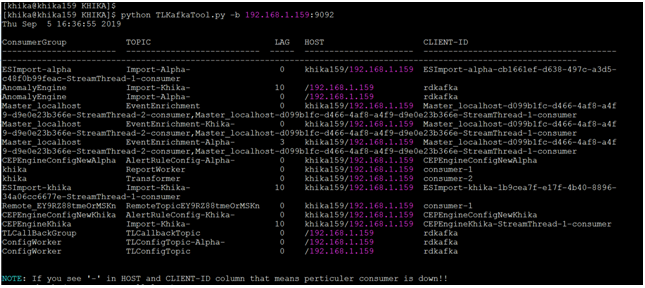

| + | ==How to check status of KHIKA Aggregator i.e. Node ?== | ||

| + | |||

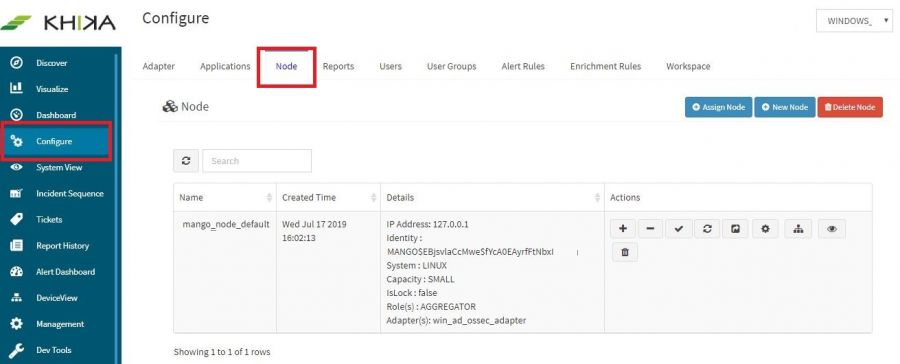

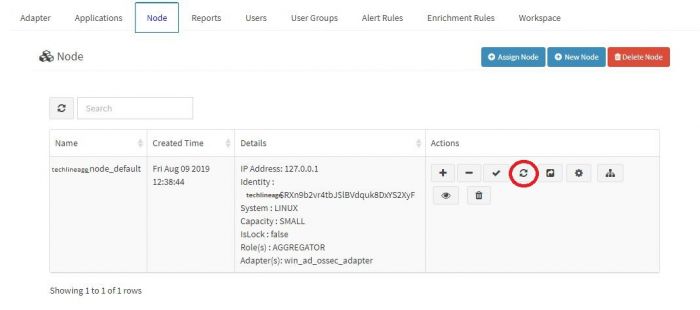

| + | 1. Go to the Configure panel in the left-side menu and then click on the Node tab.<br> | ||

| + | |||

| + | [[File: check_aggregator status.JPG| 900px]]<br> | ||

| + | |||

| + | |||

| + | 2. You will now see the list of KHIKA Aggregators (Nodes).<br> | ||

| + | |||

| + | |||

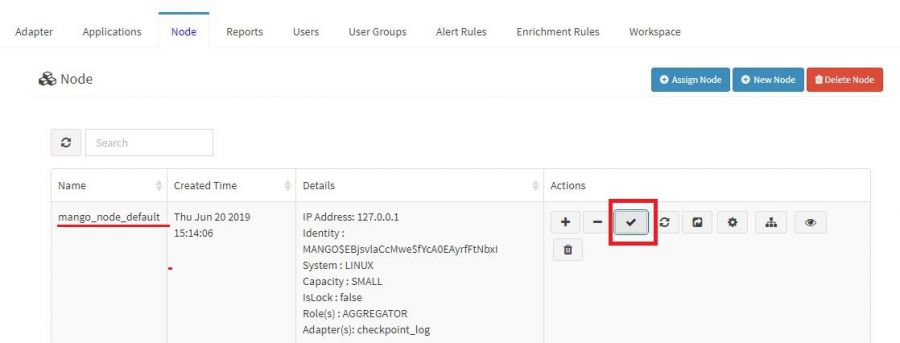

| + | 3. Click on the "Check Aggregator Status" button next to the name of the node whose status you want to check.<br> | ||

| + | |||

| + | |||

| + | [[File: aggrgator_status_button.JPG| 900px]]<br> | ||

| + | |||

| + | |||

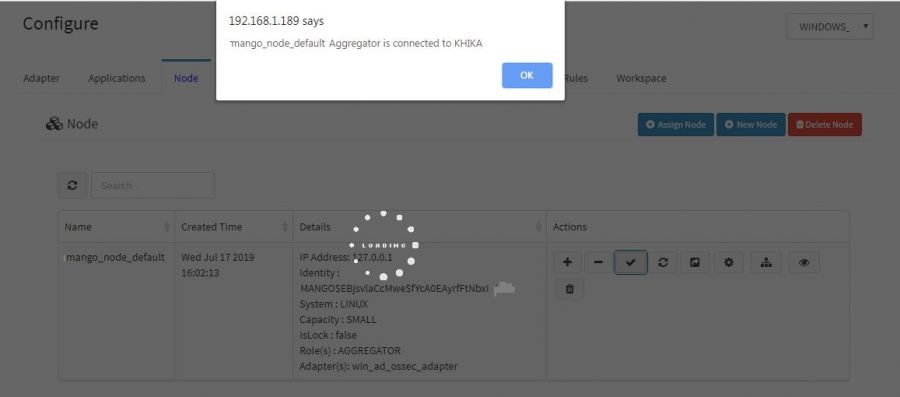

| + | 4. If the KHIKA Aggregator (i.e. node) is connected to the KHIKA Appserver, you will get a popup like "Nodename Aggregator is connected to KHIKA".<br> | ||

| + | |||

| + | [[File: aggregator_connected.JPG| 900px]]<br> | ||

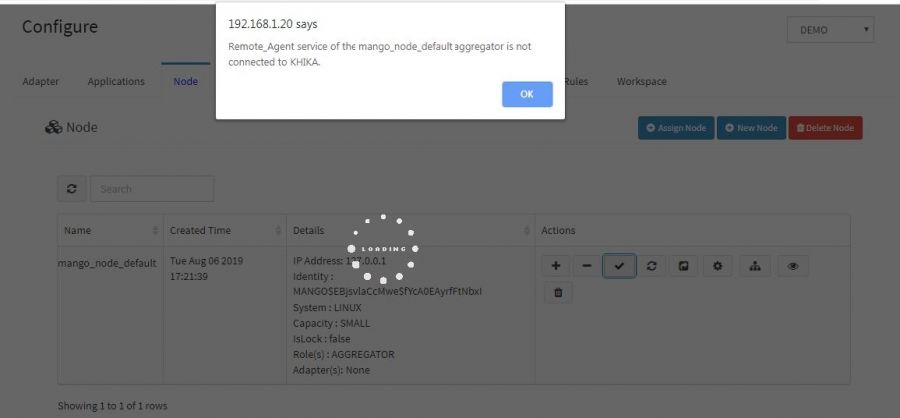

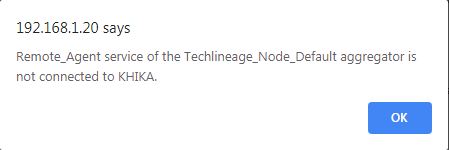

| + | 5. Otherwise, the operation will time out with an error message indicating that the node is not connected to KHIKA.<br> | ||

| + | [[File: KHIKA_aggregator_disconnected.JPG| 900px]]<br> | ||

| + | |||

| + | To troubleshoot the connection issue between the KHIKA Application Server and Aggregator, click [[FAQs#Troubleshoot_connection_error_between_KHIKA_appserver_to_Data_Aggregator|here]]<br> | ||

| + | <br> | ||

| + | |||

== How to check if raw syslog data is received in the system? What if it is not received? == | == How to check if raw syslog data is received in the system? What if it is not received? == | ||

| Line 7: | Line 32: | ||

| − | However if raw syslogs are not received from that device, we get an error while adding the device. | + | However if raw syslogs are not received from that device, we get an error while adding the device. |

| + | |||

| + | It is recommended to wait for up to 10 minutes before checking the device's data. However, please note that some devices (e.g. switches and routers) may not log data very frequently, and hence log data may not be received by KHIKA in the 10-minute period despite all the required configuration being done properly. In such a scenario, it is best to check whether any logs are generated on the device's native console in the 10-minute period and then verify whether the same logs are received by KHIKA. | ||

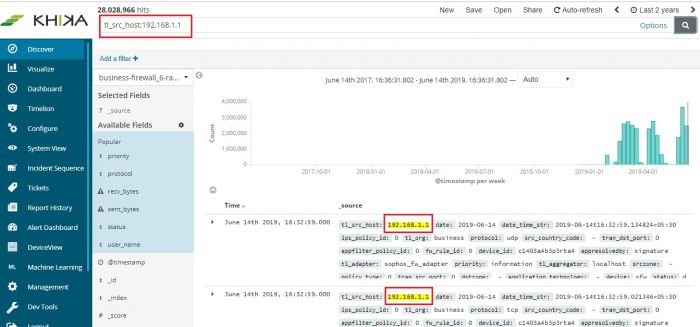

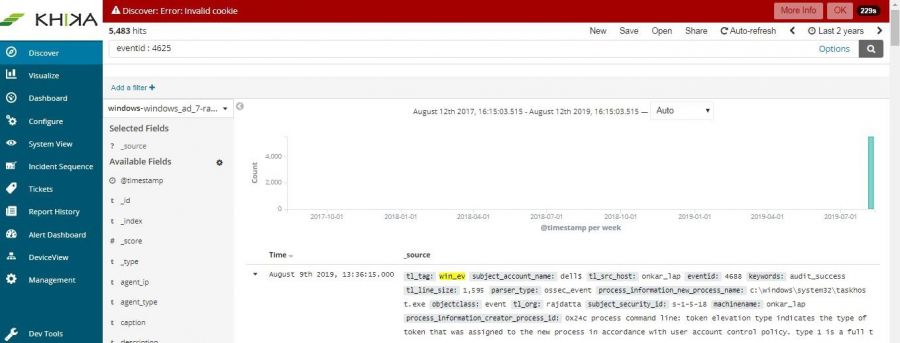

| − | + | To check whether we are receiving this device’s data in KHIKA, go to “Discover” screen from the left menu. Search for the IP address of the device in the search textbox on the top of the screen. | |

| − | In our example from the image, IP address is | + | In our example from the image, IP address is “192.168.1.1”. In the search bar in the Discover screen, just enter “192.168.1.1”. This is for showing up any and all data relevant to the device with this IP. |

| Line 20: | Line 47: | ||

If not, please check section for [[Getting Data into KHIKA#Monitoring in KHIKA using Syslog forwarding|adding data of syslog based devices]]. Both the steps – adding a device in KHIKA as well as forwarding syslogs from that device to KHIKA should be verified again. | If not, please check section for [[Getting Data into KHIKA#Monitoring in KHIKA using Syslog forwarding|adding data of syslog based devices]]. Both the steps – adding a device in KHIKA as well as forwarding syslogs from that device to KHIKA should be verified again. | ||

| + | == Troubleshoot connection error between KHIKA appserver to Data Aggregator.== | ||

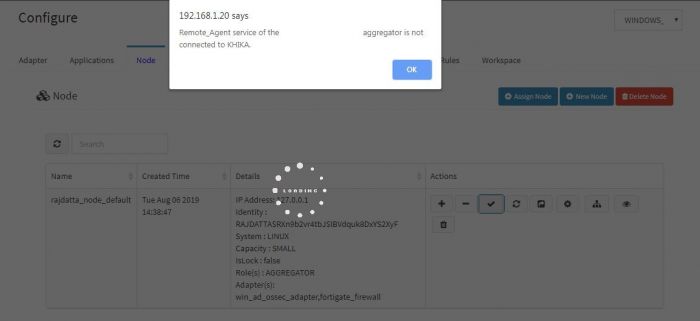

| + | First, check whether the Data Aggregator is connected to the KHIKA Appserver. Follow these steps to check the Data Aggregator status.<br> | ||

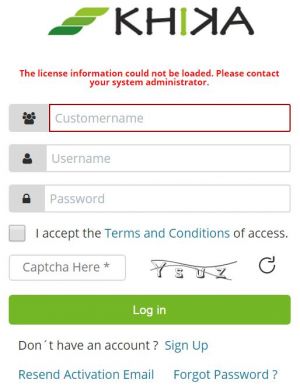

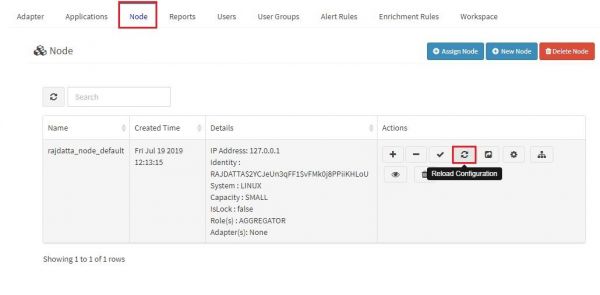

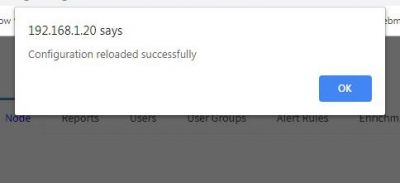

| + | 1. Log in to the KHIKA UI using appropriate credentials. | ||

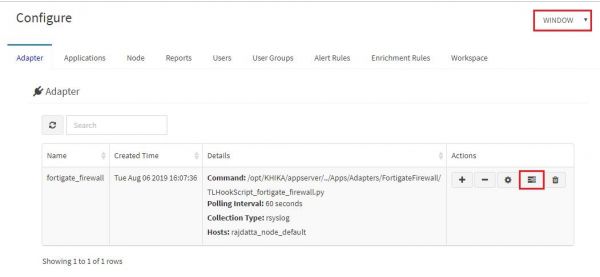

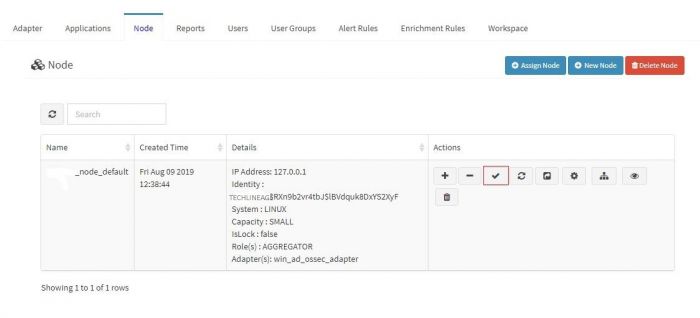

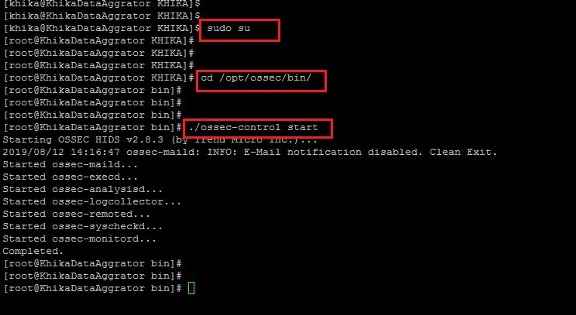

| + | 2. Go to the '''"Configure"''' tab, then click on the '''"Node"''' tab. | ||

| + | 3. Click on '''"Check Aggregator Status"''' button. | ||

| + | |||

| + | If you get the message '''"Remote_Agent service of the <node_name> aggregator is not connected to KHIKA"''' in the status popup, your aggregator is not connected to the KHIKA Appserver.<br> | ||

| + | [[File: Node_status.JPG | 500px]]<br> | ||

| + | There are several possible reasons why the Data Aggregator is not connected to the Appserver.<br> | ||

| + | 1. [[FAQs#Identity key is mismatched | Identity key is mismatched]]<br> | ||

| + | 2. [[FAQs#Kafka server ip is not set properly | Kafka server ip is not set properly]]<br> | ||

| + | 3. [[FAQs#Date of khika aggregator server is not set properly| Date of khika aggregator server is not set properly]]<br> | ||

| + | 4. [[FAQs#KHIKA Appserver is not reachable|KHIKA Appserver is not reachable]]<br> | ||

| + | 5. [[FAQs#KHIKA appserver is reachable but not connected|KHIKA appserver is reachable but not connected]] | ||

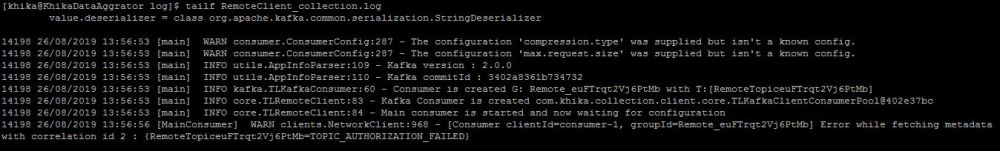

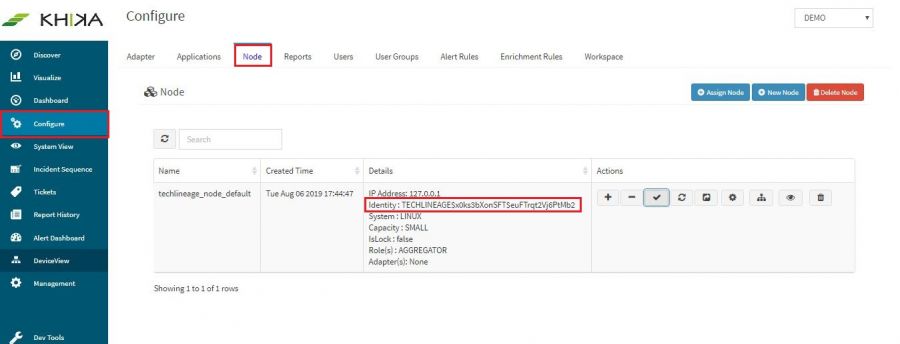

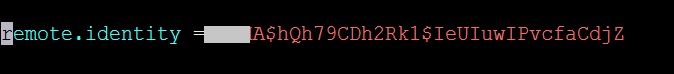

| + | ===Identity key is mismatched=== | ||

| + | This type of error occurs if the identity key in the KHIKA UI does not match the aggregator's identity key.<br> | ||

| + | If an error like "TOPIC_AUTHORIZATION_FAILED" is present in the '''"RemoteClient_collection.log"''' log file, located in the '''"/opt/KHIKA/collection/log/"''' path, it means that the identity keys are mismatched. The error is shown in the next screenshot.<br> | ||

| + | [[File:Identity_error.JPG| 1000px]]<br> | ||
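The log check described above can be sketched as a small shell function. This is a minimal sketch, not a KHIKA tool: the function name `has_identity_mismatch` is hypothetical, and the default log path is simply the one quoted above.

```shell
#!/bin/sh
# Default log location, taken from the path quoted in the text above.
KHIKA_LOG="${KHIKA_LOG:-/opt/KHIKA/collection/log/RemoteClient_collection.log}"

# has_identity_mismatch [LOGFILE]
# Hypothetical helper: succeeds (exit status 0) if the log contains the
# TOPIC_AUTHORIZATION_FAILED error, and fails otherwise (including when
# the log file cannot be read).
has_identity_mismatch() {
    grep -q "TOPIC_AUTHORIZATION_FAILED" "${1:-$KHIKA_LOG}" 2>/dev/null
}
```

For example, `has_identity_mismatch && echo "Identity keys may be mismatched"` prints the hint only when the error is present in the default log file.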

| + | |||

| + | Follow these steps to make both identity keys match.<br> | ||

| + | 1. Go to /opt/KHIKA directory. | ||

| + | '''cd /opt/KHIKA/''' | ||

| + | 2. Run "khika_configure.sh". | ||

| + | '''./khika_configure.sh''' | ||

| + | 3. Go to the KHIKA UI and log in. | ||

| + | 4. Go to '''"Configure"''' tab and then click on '''"Node"''' tab. | ||

| + | 5. Copy Identity from UI | ||

| + | [[File: Identity.JPG | 900px]] | ||

| + | 6. While the khika_configure script is running, enter the copied identity key at the '''"Specify the KHIKA Identity:"''' prompt. | ||

| + | 7. Press Enter. The message '''"KHIKA Data Aggregator service will start now. Please wait for some time"''' is displayed. | ||

| + | [[File:Run_configure_script.JPG | 900px]] | ||

| + | |||

| + | After some time you will get the '''"Khika Configuration is done"''' message. Then check whether the process is running using the following command. | ||

| + | '''ps -ef | grep RemoteClient''' | ||

| + | |||

| + | Then check the status of the Data Aggregator from the UI. To check the status of the Data Aggregator, click [[FAQs#How to check status of KHIKA Aggregator i.e. Node ?|here]]. | ||
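One caveat with the process check above: `ps -ef | grep RemoteClient` usually lists the `grep` command itself, so a match does not always mean the aggregator process is running. A minimal sketch of a stricter check follows; the helper name `remoteclient_listed` is hypothetical, and it deliberately ignores every line that contains the word "grep".

```shell
#!/bin/sh
# remoteclient_listed
# Hypothetical helper: reads `ps -ef` style output on standard input and
# succeeds only if a line mentions RemoteClient and is not a grep command.
remoteclient_listed() {
    grep "RemoteClient" | grep -v "grep" >/dev/null
}
```

It would be used as `ps -ef | remoteclient_listed && echo "RemoteClient is running"`.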

| + | |||

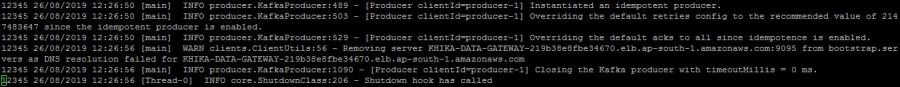

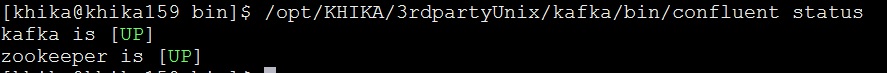

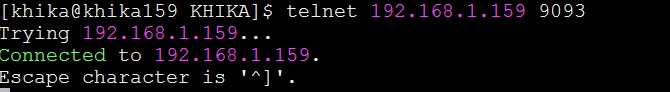

| + | ===Kafka server ip is not set properly=== | ||

| + | This type of error occurs when the Kafka server IP of the KHIKA Appserver has not been provided to the Data Aggregator.<br> | ||

| + | If messages such as '''"DNS resolution failed for <Server_ip>"''', '''"Closing the kafka producer with timeoutMillis = 0ms"''' and '''"Shutdown hook has called"''' are present in the "RemoteClient_collection.log" log file, located in the "/opt/KHIKA/collection/log/" path, it means your Data Aggregator is not connected to the Appserver because the Kafka IP is incorrect.<br> | ||

| + | Error is shown in the next screenshot.<br> | ||

| + | [[File: Kafka_server_ip.JPG| 900px]]<br> | ||
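The three log messages named above can be looked up in one pass. The following is a minimal sketch under stated assumptions: the function name `report_kafka_signatures` is hypothetical, the default path is the one quoted above, and the match is case-insensitive because the exact capitalisation in the log file is not confirmed here.

```shell
#!/bin/sh
# report_kafka_signatures [LOGFILE]
# Hypothetical helper: for each message mentioned in the text, prints
# "found: ..." or "missing: ..." depending on whether the log contains it.
report_kafka_signatures() {
    logfile="${1:-/opt/KHIKA/collection/log/RemoteClient_collection.log}"
    for pattern in \
        "DNS resolution failed for" \
        "Closing the kafka producer with timeoutMillis" \
        "Shutdown hook has called"
    do
        # -F: fixed string, -i: ignore case, -q: no output, status only
        if grep -qiF "$pattern" "$logfile" 2>/dev/null; then
            echo "found: $pattern"
        else
            echo "missing: $pattern"
        fi
    done
}
```

If all three lines are reported as found, the gateway address is a likely cause and the steps that follow apply.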

| + | |||

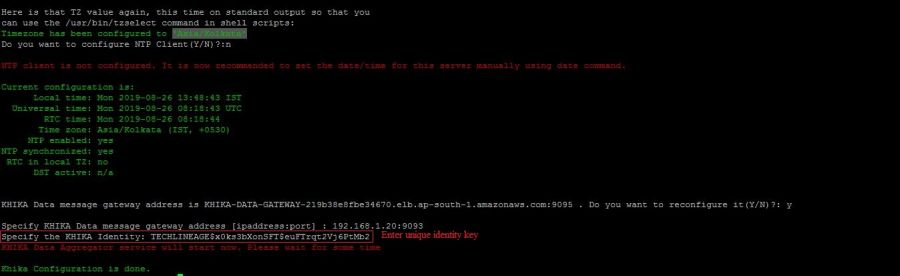

| + | Do the following steps to solve the issue.<br> | ||

| + | 1. Go to /opt/KHIKA directory. | ||

| + | '''cd /opt/KHIKA/''' | ||

| + | 2. Run "khika_configure.sh". | ||

| + | '''./khika_configure.sh''' | ||

| + | 3. After the NTP configuration, the script asks whether to set up the '''Gateway address'''. Press '''"Y"''' to set up the gateway. | ||

| + | 4. Enter the IP address and port at the '''"Specify KHIKA Data message gateway address [ipaddress:port] : "''' prompt. | ||

| + | Note: Use port 9093 for SASL authentication. | ||

| + | 5. Go to KHIKA UI and login into KHIKA. | ||

| + | 6. Go to '''"Configure"''' tab and then click on '''"Node"''' tab. | ||

| + | 7. Copy Identity from UI | ||

| + | 8. While the khika_configure script is running, enter the copied identity key at the '''"Specify the KHIKA Identity:"''' prompt. | ||

| + | 9. Press Enter. The message '''"KHIKA Data Aggregator service will start now. Please wait for some time"''' is displayed. | ||

| + | |||

| + | After some time you will get the "Khika Configuration is done" message. Then check whether the process is running using the following command. | ||

| + | '''ps -ef | grep RemoteClient''' | ||

| + | |||

| + | Then check the status of the Data Aggregator from the UI. To check the status of the Data Aggregator, click [[FAQs#How to check status of KHIKA Aggregator i.e. Node ?|here]]. | ||

| + | |||

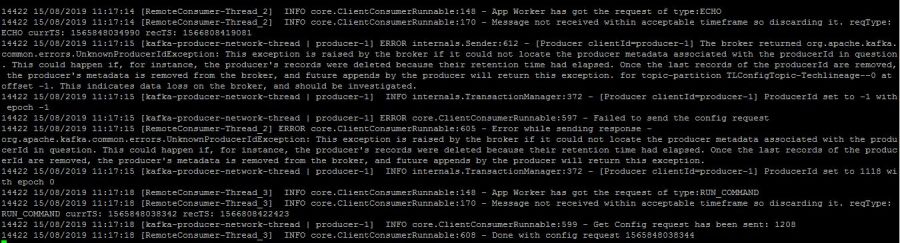

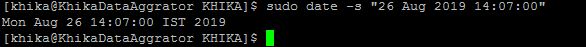

| + | ===Date of khika aggregator server is not set properly=== | ||

| + | This type of error occurs if the date and time are not set properly on your Data Aggregator server.<br> | ||

| + | If an error like the following occurs in the '''"RemoteClient_collection.log"''' log file, located in the '''"/opt/KHIKA/collection/log/"''' path, it means the date and time are not set properly.<br> | ||

| + | [[File:Time_change_error.JPG | 900px]]<br> | ||

| + | To solve this issue, follow these steps:<br> | ||

| + | 1. Stop all processes using the following command.<br> | ||

| + | '''./stop.sh''' | ||

| + | 2. Set date and time<br> | ||

| + | Example: '''sudo date -s "26 Aug 2019 13:14:00"'''<br> | ||

| + | [[File:Set_date.JPG | 900px]]<br> | ||

| + | 3. Start all processes using the following command.<br> | ||

| + | '''./start.sh''' | ||

| + | Then check the Aggregator status from [[FAQs#How to check status of KHIKA Aggregator i.e. Node ?|here]]. | ||

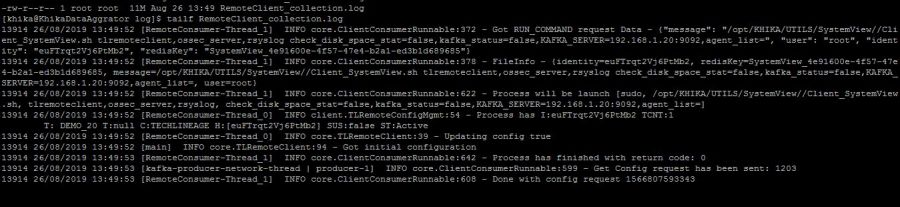

| − | == | + | After solving the above problems, go to the "/opt/KHIKA/collection/log" directory and check '''"RemoteClient_collection.log"'''. <br> |

| + | To verify the status of the Data Aggregator from the backend, look in the log file for messages like those shown in the screenshot below.<br> | ||

| + | [[File:After_setup.JPG | 900px]] | ||

| + | |||

| + | ===KHIKA Appserver is not reachable=== | ||

| + | First, check whether the KHIKA Appserver is reachable from your Data Aggregator. Use the following command to check the connection. | ||

| + | '''ping 192.168.1.20''' | ||

| + | If the appserver is unreachable then contact your network team and try to connect.<br> | ||

| + | Possible network-related causes:<br> | ||

| + | 1. Data aggregator has no access to internet.<br> | ||

| + | 2. Aggregator and appserver are not in the same network.<br> | ||

| + | 3. If the subnet is different then make sure you have a firewall rule that will allow the connection between Data Aggregator and Appserver.<br> | ||

| + | 4. Firewall is running on Data Aggregator.<br> | ||

| + | The '''firewalld''' service should not be running/active on the Data Aggregator. | ||

| + | Check whether the firewalld service is running on the Data Aggregator using the following command:<br> | ||

| + | '''systemctl status firewalld.service'''<br> | ||

| + | If the firewalld service is running/active, use the following commands to stop and disable it.<br> | ||

| + | '''systemctl stop firewalld.service'''<br> | ||

| + | '''systemctl disable firewalld.service'''<br> | ||

| + | |||

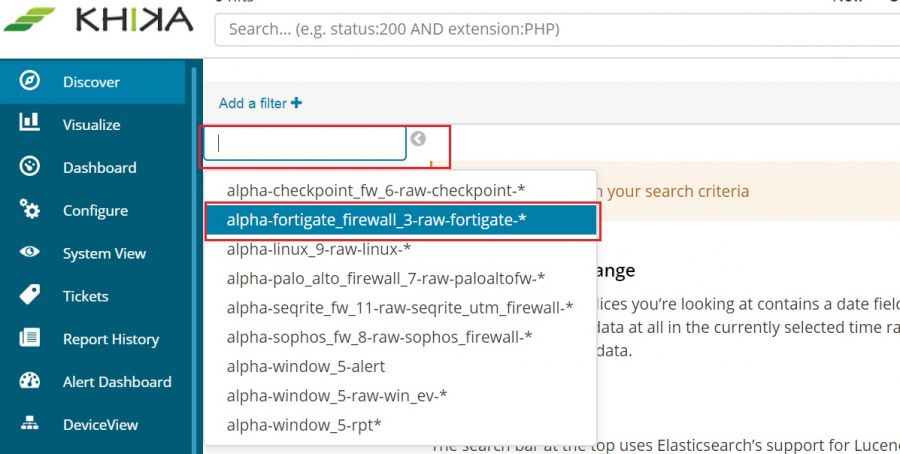

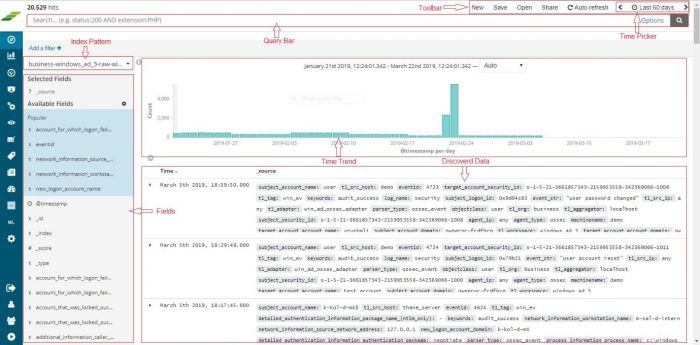

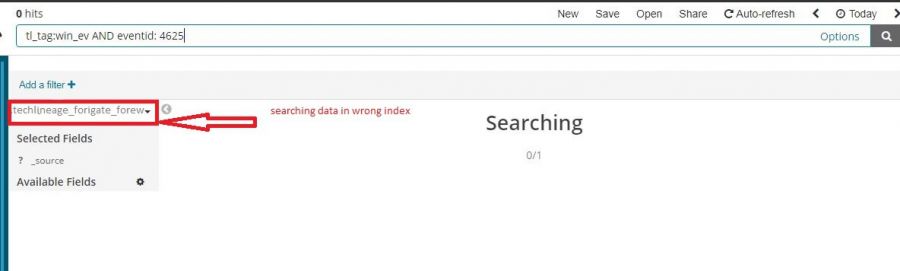

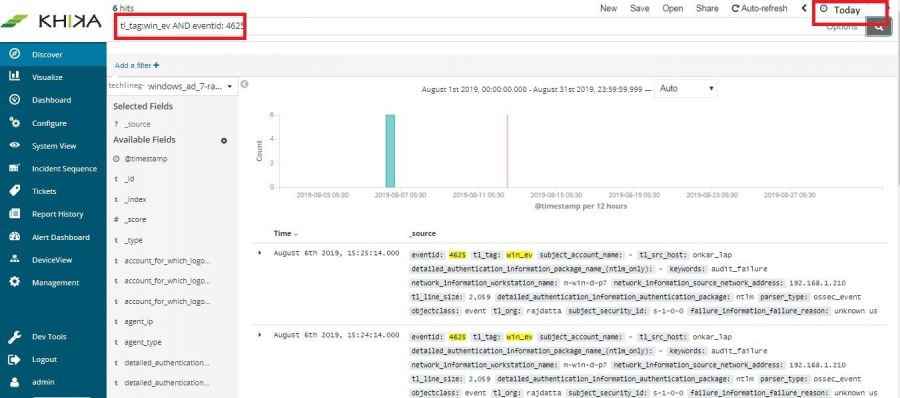

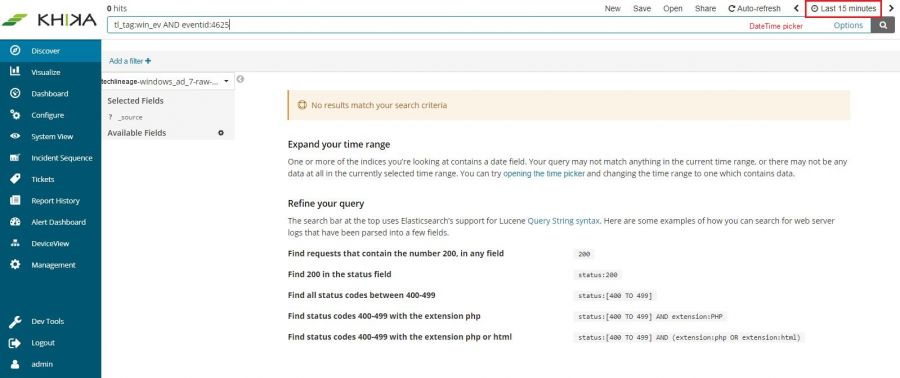

| + | == How to see raw log data on Discover Screen? == | ||

On the Discover screen, you have to choose 2 things to bring up your data : | On the Discover screen, you have to choose 2 things to bring up your data : | ||

| Line 31: | Line 153: | ||

Then select the time duration of data you want to see from the time picker functionality on top right. Selecting time window is explained [[Discover or Search Data in KHIKA#Setting the Time Filter|here]] | Then select the time duration of data you want to see from the time picker functionality on top right. Selecting time window is explained [[Discover or Search Data in KHIKA#Setting the Time Filter|here]] | ||

| − | If time duration is selected too large, it may severely affect the performance of | + | If the selected time duration is too large, it may severely affect the performance of KHIKA Search. We recommend not selecting more than the last 24 hours of data. Your searches may time out if you select large time ranges. |

Reduce your time window and try again. | Reduce your time window and try again. | ||

It is advised to keep the time window small. Conversely, if there is little or no data in the selected time window, you may want to increase the time window from the time picker and load the screen again. | It is advised to keep the time window small. Conversely, if there is little or no data in the selected time window, you may want to increase the time window from the time picker and load the screen again. | ||

| − | |||

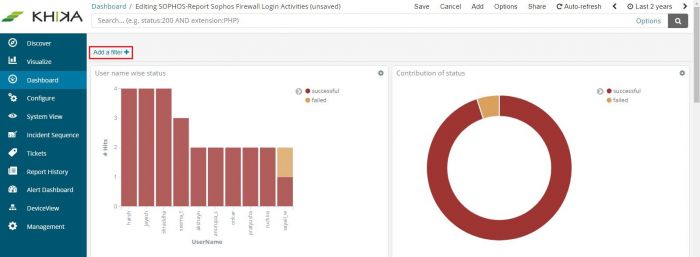

== How to select data related to a particular device on your Dashboard? == | == How to select data related to a particular device on your Dashboard? == | ||

| − | Every dashboard in KHIKA will have multiple devices | + | Every dashboard in KHIKA will have data for multiple devices in it. For example, a Linux logon dashboard has information about all the Linux devices in the "LINUX" workspace (the name of the relevant workspace appears as prefix before the name of the dashboard). |

| − | To see data on the dashboard for only one | + | To see data on the dashboard for only one Linux device, you have to select the required device on your Dashboard. There are a couple of ways to select an APV on your dashboard : |

*Add a filter | *Add a filter | ||

*Enter Search query | *Enter Search query | ||

| Line 49: | Line 170: | ||

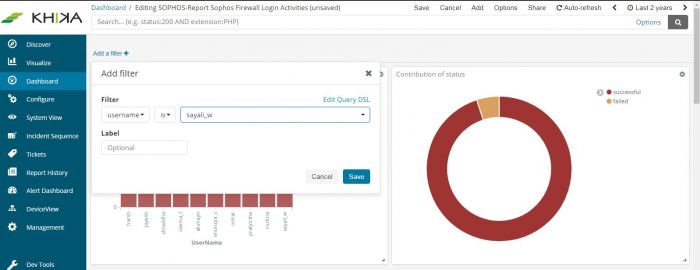

On each dashboard, there is an option, “Add a filter”. Click on the “+” sign to add a new one. Use the simple drop downs in combination, to create your logical filter query. | On each dashboard, there is an option, “Add a filter”. Click on the “+” sign to add a new one. Use the simple drop downs in combination, to create your logical filter query. | ||

| − | + | [[File:FAQ_1.JPG| 700px]] | |

| − | |||

| − | + | [[File:FAQ_2.JPG | 700px]] | |

| − | The first dropdown is the list of fields from our data. We have selected | + | The first dropdown is the list of fields from our data. We have selected '''“username”''' here. The second dropdown is a logical connector. We have selected “is” in this dropdown. The third dropdown has the values of this field. We have selected one device say '''sayali_w''' here. So now, our filter query is: '''“username is sayali_w”''' |

Click on Save at the bottom of this filter pop up. | Click on Save at the bottom of this filter pop up. | ||

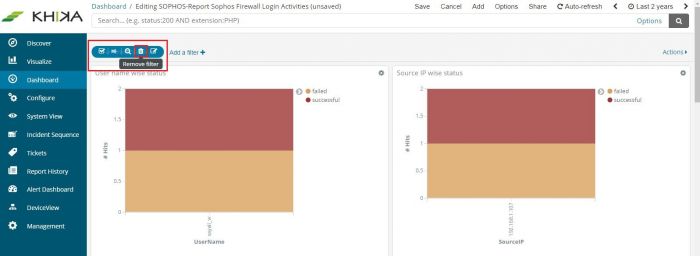

| − | Your Dashboard now shows data for only the selected device in all the pie charts, bar graphs and summary table – everywhere in the dashboard. | + | Your Dashboard now shows data for only the selected device in all the pie charts, bar graphs, and summary table – everywhere in the dashboard. |

The applied filter is seen on top. | The applied filter is seen on top. | ||

| − | + | [[File: FAQ_3.JPG| 700px]] | |

| Line 68: | Line 188: | ||

| − | + | [[File: FAQ_4.JPG | 700px]] | |

| − | Please Note : If this is just a single search event, | + | Please Note: If this is just a single search event, do not follow further steps. If you want to save this search for this particular device with the Dashboard, follow steps given further to save the search. |

Click on Edit link on the top right of the Dashboard – Save link appears. Click on Save to save this search query with the dashboard. | Click on Edit link on the top right of the Dashboard – Save link appears. Click on Save to save this search query with the dashboard. | ||

| − | + | [[File:FAQ_5.JPG| 700px]] | |

| Line 81: | Line 201: | ||

=== Steps to Search and Save === | === Steps to Search and Save === | ||

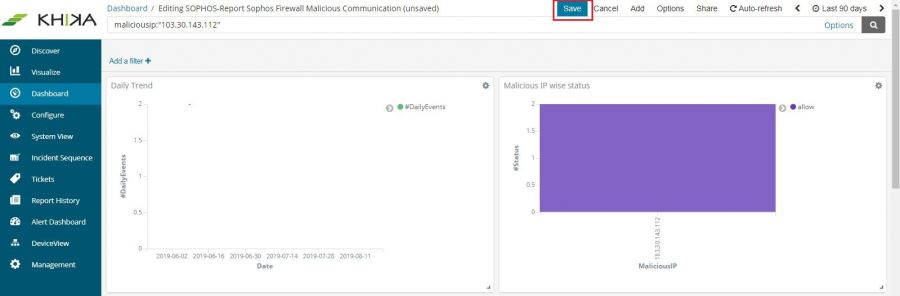

| − | On the top of the Dashboard, there is a text box for search. Enter your device search query for a particular device in this box. | + | On the top of the Dashboard, there is a text box for search. Enter your device search query for a particular device in this box. <br> |

| − | + | [[File:Search11.JPG|900px]] <br> | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

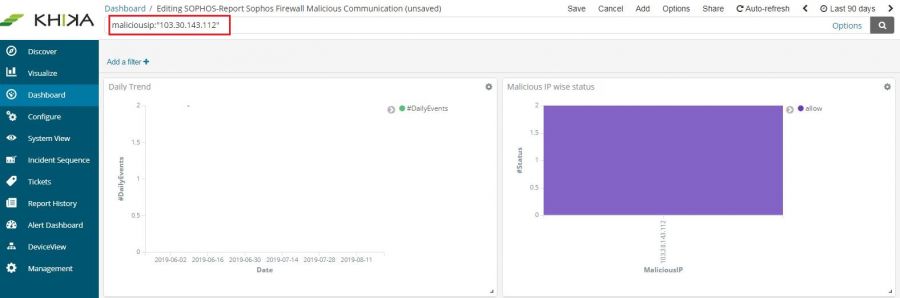

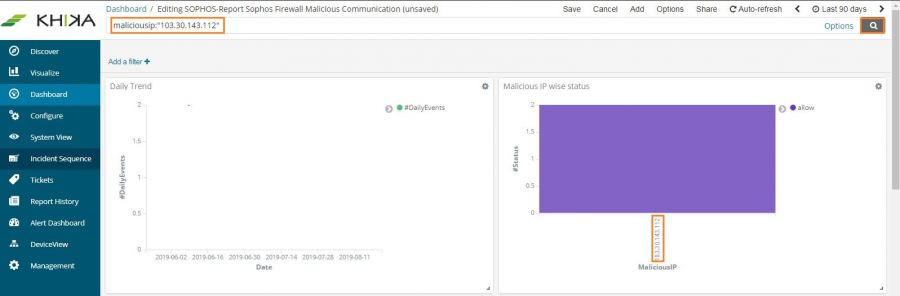

| + | We have entered maliciousip:"103.30.143.112". This is the syntax for maliciousip equal to 103.30.143.112. Click on the rightmost search button in that textbox to search for this particular device on the dashboard. | ||

| + | All the elements on the Dashboard shall now reflect data for the selected device. <br> | ||

| + | [[File:Search12.JPG|900px]] <br> | ||

Please Note : If this is just a single search event, do not follow further steps. If you want to save this search for this particular device with the Dashboard, follow steps given further to save the search. | Please Note : If this is just a single search event, do not follow further steps. If you want to save this search for this particular device with the Dashboard, follow steps given further to save the search. | ||

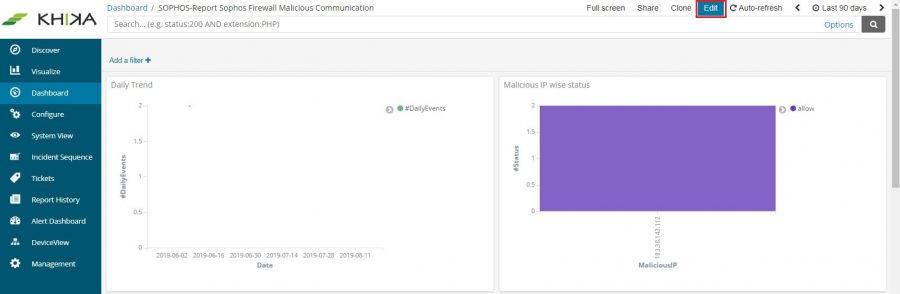

| − | Click on Edit link above the search textbox – Save link appears. Click on Save to save this search query with the dashboard. | + | Click on Edit link above the search textbox – Save link appears. Click on Save to save this search query with the dashboard. <br> |

| + | [[File:Search13.JPG|900px]] <br> | ||

| − | |||

| + | [[File:Search14.JPG|900px]] <br> | ||

This shall stay with the Dashboard and will be seen every time we open the Dashboard. | This shall stay with the Dashboard and will be seen every time we open the Dashboard. | ||

| − | To remove the search, select the search query which you can see in that textbox, remove / delete it. Click on Edit and Save the Dashboard again. It changes back to its previous state. | + | To remove the search, select the search query which you can see in that textbox, remove / delete it. Click on Edit and Save the Dashboard again. It changes back to its previous state. |

| − | |||

| − | |||

| − | |||

| − | |||

== How do I estimate my per day data? == | == How do I estimate my per day data? == | ||

| Line 119: | Line 230: | ||

We need to make SMTP settings in KHIKA so that KHIKA alerts and reports can be sent to relevant stakeholders as emails. | We need to make SMTP settings in KHIKA so that KHIKA alerts and reports can be sent to relevant stakeholders as emails. | ||

| − | Please refer the dedicated section for [[SMTP | + | Please refer to the dedicated section for [[SMTP Server Settings]] |

| − | + | == Integrating log data from a device via Syslog == | |

| − | == | ||

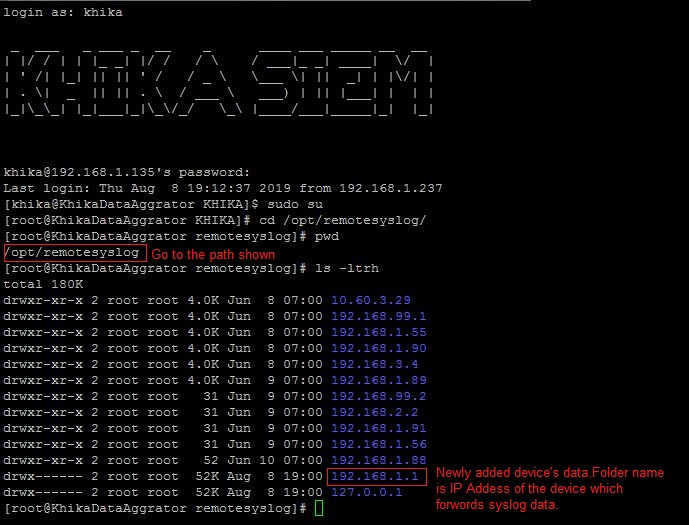

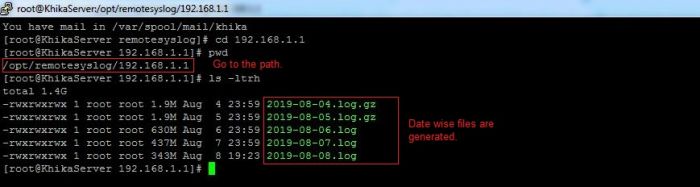

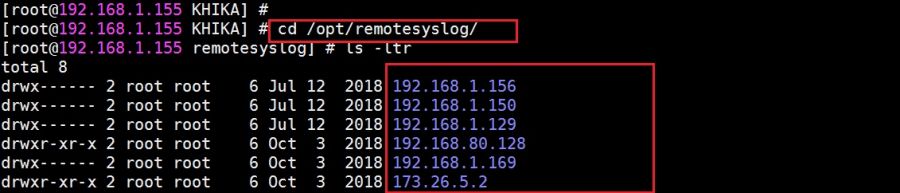

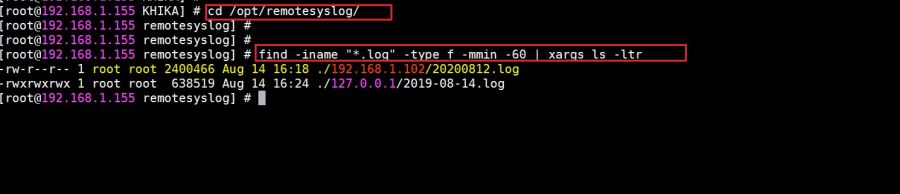

The Syslog service is pre-configured on your KHIKA aggregator server (on UDP port 514). Syslogs are stored in the /opt/remotesylog directory, with the IP address of the sending device as the directory name for each device sending data. This way, the data of each device is stored in a distinct directory and files. For example, if you are sending syslogs from your firewall, which has an IP of 192.168.1.1, you will see a directory with the name /opt/remotesylog/192.168.1.1 on the KHIKA Data Aggregator. The files will be created in this directory with the date and time stamp <example : 2019-08-08.log>. If you do a "'''tail -f'''" on the latest file, you will see live logs coming in. | The Syslog service is pre-configured on your KHIKA aggregator server (on UDP port 514). Syslogs are stored in the /opt/remotesylog directory, with the IP address of the sending device as the directory name for each device sending data. This way, the data of each device is stored in a distinct directory and files. For example, if you are sending syslogs from your firewall, which has an IP of 192.168.1.1, you will see a directory with the name /opt/remotesylog/192.168.1.1 on the KHIKA Data Aggregator. The files will be created in this directory with the date and time stamp <example : 2019-08-08.log>. If you do a "'''tail -f'''" on the latest file, you will see live logs coming in. | ||

| Line 147: | Line 257: | ||

5. cd to /opt/remotesylog and do "'''ls -ltr'''" here. If you see the directory with the name of the ip of the device sending the data, you have started receiving the data in syslogs.<br> | 5. cd to /opt/remotesylog and do "'''ls -ltr'''" here. If you see the directory with the name of the ip of the device sending the data, you have started receiving the data in syslogs.<br> | ||
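The directory check in step 5 can be sketched as a shell function that prints the newest log file for one device. This is a minimal sketch: the function name `latest_syslog_file` is hypothetical, and the default base directory is the one named in the text above.

```shell
#!/bin/sh
# latest_syslog_file DEVICE_IP [BASE_DIR]
# Hypothetical helper: prints the most recently modified file in the
# per-device syslog directory, or fails if the directory is missing or empty.
latest_syslog_file() {
    device_dir="${2:-/opt/remotesylog}/$1"
    [ -d "$device_dir" ] || return 1
    # ls -t sorts by modification time, newest first
    newest="$(ls -t "$device_dir" | head -n 1)"
    [ -n "$newest" ] || return 1
    echo "$device_dir/$newest"
}
```

For example, `tail -f "$(latest_syslog_file 192.168.1.1)"` follows the live log of the firewall used as the example device above.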

| − | ===Data is not | + | ===Data is not received on KHIKA Data Aggregator=== |

| − | # | + | #Please wait for some time. |

| − | #Some devices such as switches, routers, etc | + | #Some devices such as switches, routers, etc. do not generate many syslogs. |

#It depends on the activity on the device. Try doing some activities such as login and issue some commands etc. The intention is to generate some syslogs. | #It depends on the activity on the device. Try doing some activities such as login and issue some commands etc. The intention is to generate some syslogs. | ||

#Check if logs are generating and being received on KHIKA Data Aggregator in the directory "/opt/remotesylog/ip_of_device". Do <b>ls -ltrh</b> | #Check if logs are generating and being received on KHIKA Data Aggregator in the directory "/opt/remotesylog/ip_of_device". Do <b>ls -ltrh</b> | ||

| Line 170: | Line 280: | ||

'''systemctl start syslog-ng''' | '''systemctl start syslog-ng''' | ||

Make sure you are receiving the logs in the directory /opt/remotesylog/ip_of_device | Make sure you are receiving the logs in the directory /opt/remotesylog/ip_of_device | ||

| − | Go to [[ | + | Go to [[Dealing with Syslog Device in KHIKA#Started Receiving the logs on Syslog server|Started Receiving the logs]] only after you start receiving the logs. |

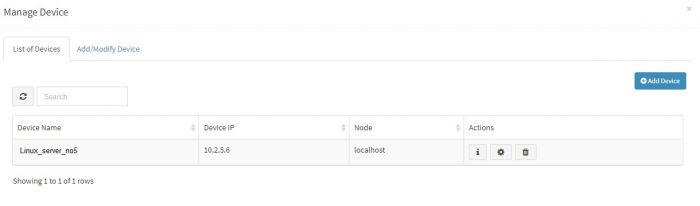

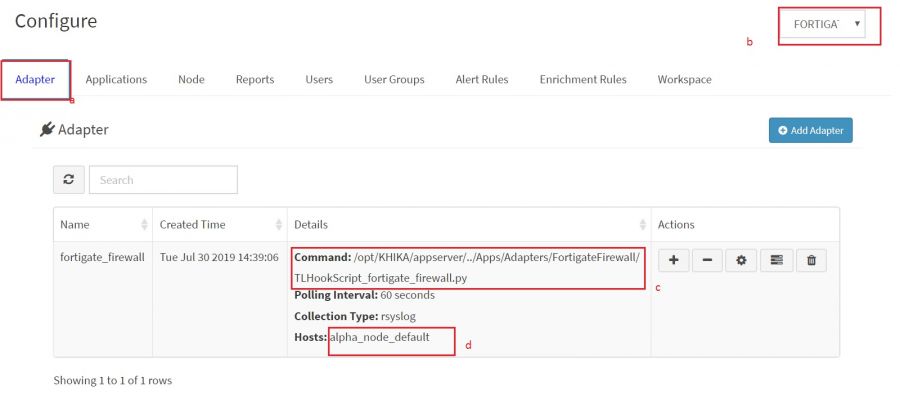

| − | === | + | === Received log data on KHIKA Data Aggregator=== |

Now you need to add a device from KHIKA GUI.<br> | Now you need to add a device from KHIKA GUI.<br> | ||

If the similar device of a data source has already been added to KHIKA | If the similar device of a data source has already been added to KHIKA | ||

| − | #Add this device to the same Adapter using following steps explained [[Getting Data into KHIKA#Adding device | + | #Add this device to the same Adapter using following steps explained [[Getting Data into KHIKA#Adding the device in the Adaptor|here]]. |

| − | #Else, check if App for this device is available with KHIKA. If the App is available, [[Load KHIKA App|load the App]] and then Add device to the adapter using the steps explained [[Getting Data into KHIKA | + | #Else, check if App for this device is available with KHIKA. If the App is available, [[Load KHIKA App|load the App]] and then Add device to the adapter using the steps explained [[Getting Data into KHIKA#Adding the device in the Adaptor|here]]. |

#Else, develop a new Adapter (and perhaps a complete App) for this data source. Please read section on [[Write Your Own Adapter|how to write your own adapter on Wiki]], after writing your own adapter, testing it, you can [[Working with KHIKA Adapters|configure the adapter]] and then start consuming data into KHIKA. Explore the data in KHIKA using [[Discover or Search Data in KHIKA|KHIKA search interface]] | #Else, develop a new Adapter (and perhaps a complete App) for this data source. Please read section on [[Write Your Own Adapter|how to write your own adapter on Wiki]], after writing your own adapter, testing it, you can [[Working with KHIKA Adapters|configure the adapter]] and then start consuming data into KHIKA. Explore the data in KHIKA using [[Discover or Search Data in KHIKA|KHIKA search interface]] | ||

| + | ==Integrating log data from device via Ossec == | ||

| + | The KHIKA Data Aggregator embeds an OSSEC Server which receives log data from ossec agents installed on server devices. | ||

| − | |||

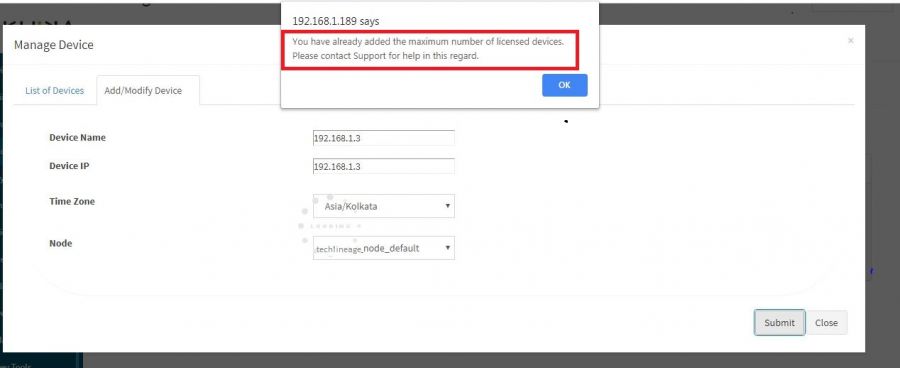

=== Failing to add ossec based device=== | === Failing to add ossec based device=== | ||

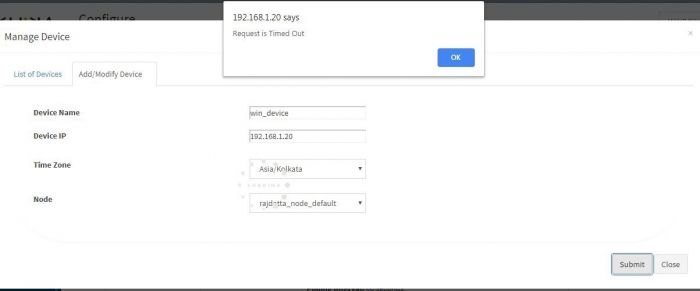

1. Time out Error | 1. Time out Error | ||

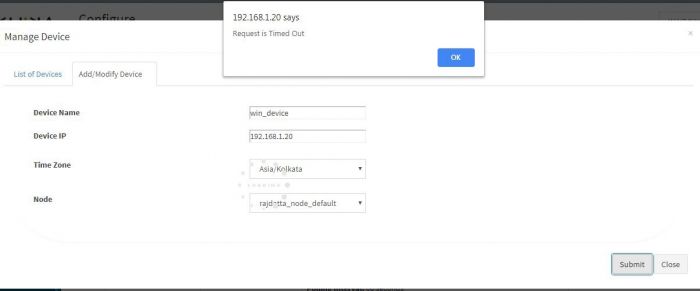

| − | Check if you are getting following Error while adding the device. | + | Check if you are getting following Error while adding the device. <br> |

[[File: Ossec_device1.jpg|700px]]<br> | [[File: Ossec_device1.jpg|700px]]<br> | ||

<br> | <br> | ||

| − | This means your aggregator | + | This means your aggregator may not be connected to KHIKA Application Server.<br> |

| − | + | Please proceed to check if the aggregator is connected to KHIKA server. | |

1. Go to node tab in KHIKA GUI. | 1. Go to node tab in KHIKA GUI. | ||

2. Click on Check Aggregator Status button as shown in the screenshot below<br> | 2. Click on Check Aggregator Status button as shown in the screenshot below<br> | ||

[[File:Ossec_device2.jpg|700px]]<br> | [[File:Ossec_device2.jpg|700px]]<br> | ||

| − | 3. If it shows that the aggregator is not connected to KHIKA Server, it means that | + | 3. If it shows that the aggregator is not connected to KHIKA Server, it means that your aggregator is not connected to the KHIKA AppServer. Click [[FAQs#How to check status of KHIKA Aggregator i.e. Node ?|here]] to check the Data Aggregator status. |

2. Device is already present<br> | 2. Device is already present<br> | ||

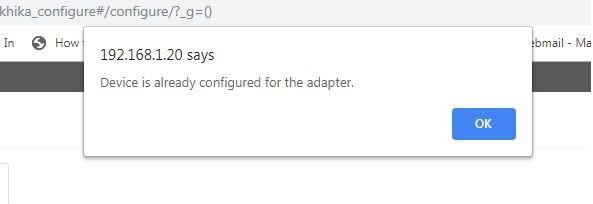

| − | Check if you are getting the following message while adding the device | + | Check if you are getting the following message while adding the device <br> |

[[File:Ossec_device3.jpg|700px]]<br> | [[File:Ossec_device3.jpg|700px]]<br> | ||

We cannot add the same device twice. Check whether you have already added the device in the device list. | We cannot add the same device twice. Check whether you have already added the device in the device list. | ||

| − | ===Data is not | + | ===Device Data is not visible in KHIKA=== |

Check your agent status to see if it is connected to OSSEC Server(KHIKA Aggregator).<br> | Check your agent status to see if it is connected to OSSEC Server(KHIKA Aggregator).<br> | ||

To find the list of ossec agents along with their status, click [[FAQs#How to Find list of ossec agents along with it's status on command line|here]]<br> | To find the list of ossec agents along with their status, click [[FAQs#How to Find list of ossec agents along with it's status on command line|here]]<br> | ||

| Line 204: | Line 315: | ||

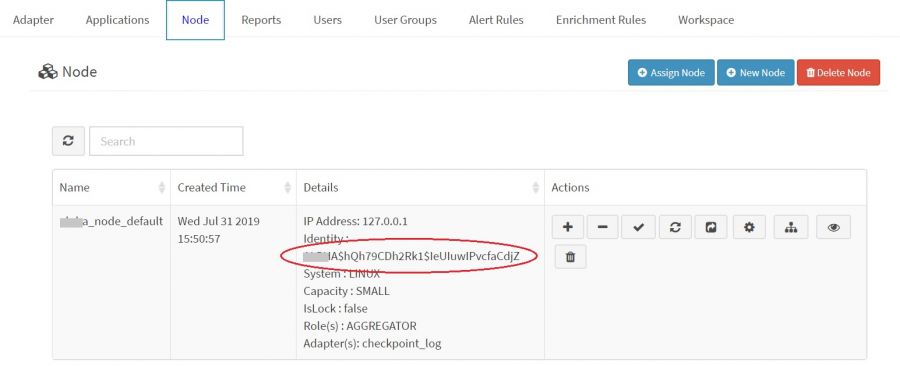

1. Go to the workspace in which the device is added.<br> | 1. Go to the workspace in which the device is added.<br> | ||

| − | 2. Check in which workspace the device is added, refer the following screenshot<br> | + | 2. Check in which workspace the device is added; refer to the following screenshot<br> |

[[File:Ossec_device5.jpg | 600px]]<br> | [[File:Ossec_device5.jpg | 600px]]<br> | ||

3. We may need to select appropriate index pattern in which data can be searched for requested server.<br> | 3. We may need to select appropriate index pattern in which data can be searched for requested server.<br> | ||

4. Check Data is available on Discover page<br> | 4. Check Data is available on Discover page<br> | ||

[[File:Ossec23.jpg|700px]]<br> | [[File:Ossec23.jpg|700px]]<br> | ||

| − | 5. In the search bar we should include the server name to check if related logs are coming or not. | + | 5. In the search bar, we should include the server name to check if related logs are coming or not. |

Examples: | Examples: | ||

| − | 1. If customer name is XYZ and if the server is in windows_servers workspace then we must select <XYZ>_< | + | 1. If customer name is XYZ and if the server is in windows_servers workspace then we must select <XYZ>_<<WORKSPACE_NAME>_<ID>>_raw_<tl_tag> index pattern.<br> |

2. tl_src_host : “<servername>” | 2. tl_src_host : “<servername>” | ||

| − | + | 6. If you don’t find data from this device using above steps, you need to check if the device is actually generating any log data at all or not. In case of a Windows server, you will need to check if events are getting logged in security or system event log via the event viewer. In case of a Linux server, you should check if any messages are getting logged in syslog files. | |

| − | 6. If you don’t find data from this device using above steps, | ||

===Ossec Agent And Ossec Server Connection issue=== | ===Ossec Agent And Ossec Server Connection issue=== | ||

====Ossec Server not running==== | ====Ossec Server not running==== | ||

There could be a problem where ossec server is stopped and is not running.<br> | There could be a problem where ossec server is stopped and is not running.<br> | ||

| − | Go to node tab and click on Reload Configuration button to restart the ossec server.<br> | + | Go to the node tab and click on the Reload Configuration button to restart the ossec server. To check how to restart the ossec server, click [[FAQs#How to Restart OSSEC Server|here]]<br> |

[[File:Ossec_device4.jpg| 700px]]<br> | [[File:Ossec_device4.jpg| 700px]]<br> | ||

| − | ==== | + | If there is any error in restarting ossec server, the KHIKA aggregator may not be connected to KHIKA Appserver. Please click [[FAQs#How to check status of KHIKA Aggregator i.e. Node ?|here]] to check status of KHIKA Aggregator (i.e. Node).<br> |

| − | If you have the following message on the [[FAQs#How to check logs in Linux Ossec Agent| Linux]] agent log or [[FAQs#How to check logs in Windows Ossec Agent| Windows]] agent log. | + | <br> |

| + | |||

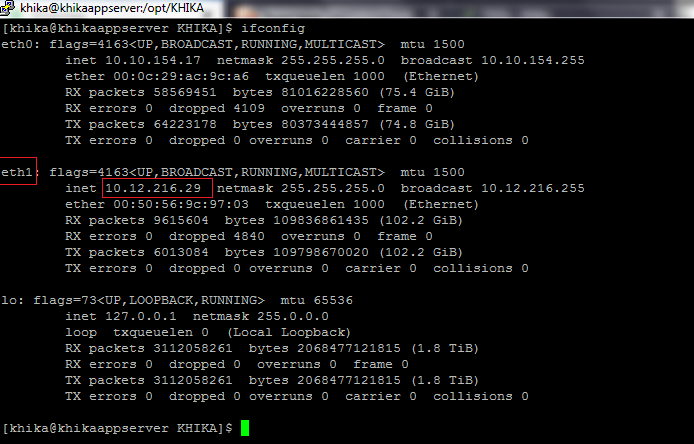

| + | ====Firewall between the agent and the server ==== | ||

| + | If there is a firewall between the agent and the server blocking the communication, you will see the following message in the [[FAQs#How to check logs in Linux Ossec Agent| Linux]] agent log or [[FAQs#How to check logs in Windows Ossec Agent| Windows]] agent log. | ||

'''Waiting for server reply (not started)''' | '''Waiting for server reply (not started)''' | ||

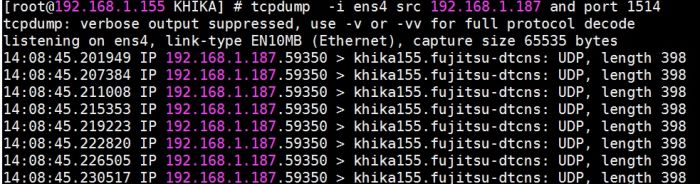

| Line 233: | Line 346: | ||

[[File:Ossec_device6.jpg| 700px]] | [[File:Ossec_device6.jpg| 700px]] | ||

| − | ====Wrong authentication keys configured | + | To identify the correct interface to use for tcpdump, run 'ifconfig' and choose the interface that corresponds to the IP address on which the ossec server is listening. For example, if the IP address is '''10.12.216.29''', the interface would be '''eth1'''. Please refer to the screenshot. <br> |

| + | |||

| + | [[File:ip_address_list.png|700px]] | ||

| + | <br> | ||

| + | |||

| + | ====Wrong authentication keys configured ==== | ||

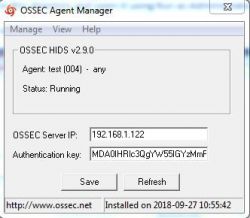

If that’s the case, you would be getting logs similar to '''Waiting for server reply (not started)''' on the agent side and '''Incorrectly formated message from 'xxx.xxx.xxx.xxx'.''' on the server-side.<br> | If that’s the case, you would be getting logs similar to '''Waiting for server reply (not started)''' on the agent side and '''Incorrectly formated message from 'xxx.xxx.xxx.xxx'.''' on the server-side.<br> | ||

| − | 1. [[FAQs#How to check logs in Windows Ossec Agent | + | 1. [[FAQs#How to check logs in Windows Ossec Agent|Check Windows Ossec agent logs]]<br> |

| − | 2. [[FAQs#How to check logs in Linux Ossec Agent | + | 2. [[FAQs#How to check logs in Linux Ossec Agent|Check Linux Ossec agent logs]]<br> |

| − | 3. [[FAQs# | + | 3. [[FAQs#How to check OSSEC Server logs|Check Ossec server log]]<br> |

'''Resolution :''' | '''Resolution :''' | ||

| Line 243: | Line 361: | ||

1. [[Getting Data into KHIKA#Insert unique OSSEC key in Windows OSSEC Agent|Importing the ossec key to Windows ossec agent]]<br> | 1. [[Getting Data into KHIKA#Insert unique OSSEC key in Windows OSSEC Agent|Importing the ossec key to Windows ossec agent]]<br> | ||

2. [[Getting Data into KHIKA#Insert unique OSSEC key in Linux OSSEC Agent|Importing the ossec key to Linux ossec agent]]<br> | 2. [[Getting Data into KHIKA#Insert unique OSSEC key in Linux OSSEC Agent|Importing the ossec key to Linux ossec agent]]<br> | ||

| + | |||

| + | ====Ossec agent was already installed ==== | ||

| + | |||

| + | Before installing the ossec agent, please check whether an ossec agent is already installed. If one is already installed, installing again overrides the existing configuration, which may lead to a connection issue. In that case, go to the install path and follow the steps below.<br> | ||

| + | |||

| + | |||

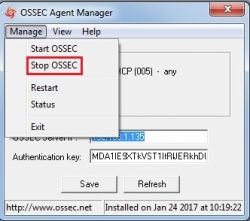

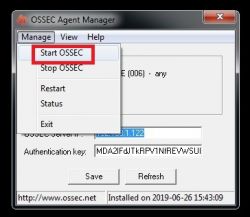

| + | 1. For Windows </br> | ||

| + | a. Stop Ossec Agent | ||

| + | [[File:Windows_device_11.jpg | 250px]]<br> | ||

| + | b. Remove or rename the ossec agent folder (agent_folder)<br> | ||

| + | c. [[FAQs#How to Reinstall OSSEC Agent for Windows|Reinstall the ossec agent]]<br> | ||

| + | d. Start Ossec agent<br> | ||

| + | [[File:Windows_device_3.jpg| 250px]]<br> | ||

| + | |||

| + | 2. For Linux -</br> | ||

| + | a. [[FAQs#How to Stop OSSEC Agent using command line|Stop Ossec Agent]]<br/> | ||

| + | b. Remove or rename the ossec directory on the Linux server, e.g. '''mv /opt/ossec /opt/ossec_bak''' <br/> | ||

| + | c. [[FAQs#How to Reinstall OSSEC Agent for Linux|Reinstall the ossec agent]].<br/> | ||

| + | d. [[FAQs#How to Start OSSEC Agent using command line|Start Ossec agent]]<br> | ||
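The rename in the Linux steps above can be sketched as a small guard that refuses to overwrite an earlier backup. This is a minimal sketch; the function name backup_ossec_dir is hypothetical, and /opt/ossec is the install path used elsewhere on this page.

```shell
#!/bin/sh
# backup_ossec_dir (hypothetical helper): renames DIR to DIR_bak, but only if
# DIR exists and no earlier backup would be overwritten.
backup_ossec_dir() {
  src="${1:-/opt/ossec}"
  if [ ! -d "$src" ]; then
    echo "no such directory: $src" >&2
    return 1
  fi
  if [ -e "${src}_bak" ]; then
    echo "backup already exists: ${src}_bak" >&2
    return 1
  fi
  mv "$src" "${src}_bak"
}

# Example usage (as root, after stopping the agent):
#   backup_ossec_dir /opt/ossec
```

Renaming instead of deleting keeps the old client.keys and ossec.conf available in case the reinstall needs to be compared against the previous configuration.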

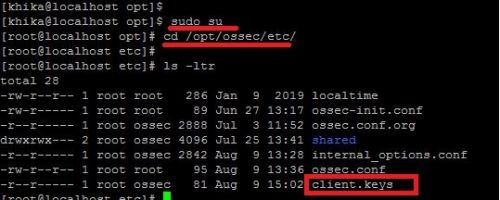

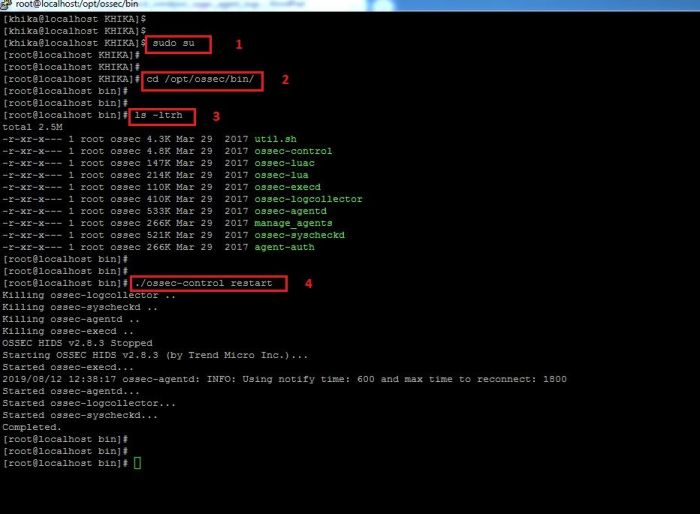

====Ossec issues in linux agent.==== | ====Ossec issues in linux agent.==== | ||

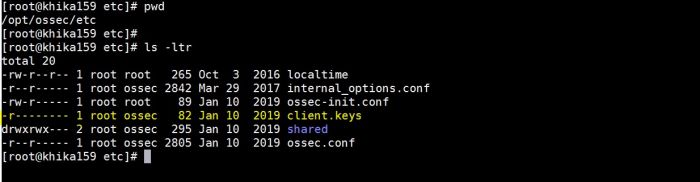

| − | 1. If you have logs similar to the following in '''/opt/ossec/logs/ossec.log'''.Click [[FAQs#How to check logs in Linux Ossec Agent | + | 1. If you have logs similar to the following in '''/opt/ossec/logs/ossec.log''' (click [[FAQs#How to check logs in Linux Ossec Agent|here]] to check Linux ossec agent logs):<br> |

'''ERROR: Queue '/opt/ossec/queue/ossec/queue' not accessible: 'Connection refused'. Unable to access queue: '/var/ossec/queue/ossec/queue'. Giving up.''' | '''ERROR: Queue '/opt/ossec/queue/ossec/queue' not accessible: 'Connection refused'. Unable to access queue: '/var/ossec/queue/ossec/queue'. Giving up.''' | ||

| Line 256: | Line 393: | ||

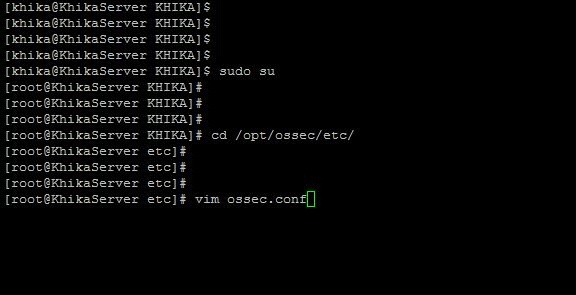

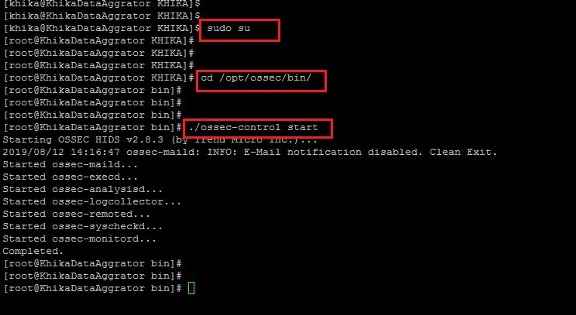

2. Set root user using '''sudo su''' command.<br> | 2. Set root user using '''sudo su''' command.<br> | ||

3. '''cd /opt/ossec/etc/'''<br> | 3. '''cd /opt/ossec/etc/'''<br> | ||

| − | [[File:Ossec_device8.jpg| | + | [[File:Ossec_device8.jpg|500px]]<br> |

4. '''chmod 440 client.keys'''<br> | 4. '''chmod 440 client.keys'''<br> | ||

5. '''chown root:ossec client.keys'''<br> | 5. '''chown root:ossec client.keys'''<br> | ||

| Line 262: | Line 399: | ||

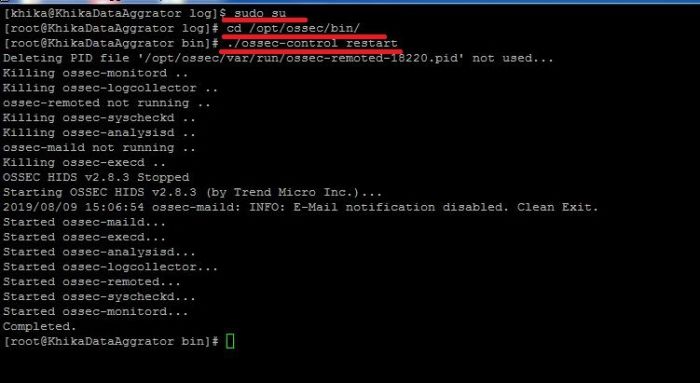

7. '''./ossec-control restart'''<br> | 7. '''./ossec-control restart'''<br> | ||
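After the chmod and chown steps above, the result can be checked before restarting. This is a minimal sketch that assumes GNU stat (standard on most Linux distributions); the helper name keys_perms is hypothetical.

```shell
#!/bin/sh
# keys_perms (hypothetical helper): prints "<octal mode> <owner>:<group>"
# for the given file, using GNU stat.
keys_perms() {
  stat -c '%a %U:%G' "$1"
}

# Example usage on the agent (as root):
#   keys_perms /opt/ossec/etc/client.keys
# After steps 4 and 5 above, the expected result is: 440 root:ossec
```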

| − | 2. If you have logs similar to '''"ERROR: Authentication key file '/opt/ossec/etc/client.keys' not found."''' in '''/opt/ossec/logs/ossec.log'''.Click [[FAQs#How to check logs in Linux Ossec Agent | + | 2. If you have logs similar to '''"ERROR: Authentication key file '/opt/ossec/etc/client.keys' not found."''' in '''/opt/ossec/logs/ossec.log''' (click [[FAQs#How to check logs in Linux Ossec Agent|here]] to check Linux ossec agent logs):<br> |

This means the file '''client.keys is not available''' on path "'''/opt/ossec/etc/'''" | This means the file '''client.keys is not available''' on path "'''/opt/ossec/etc/'''" | ||

'''Resolution:'''<br> | '''Resolution:'''<br> | ||

| Line 274: | Line 411: | ||

[[File:Ossec_device9.jpg|700px]] | [[File:Ossec_device9.jpg|700px]] | ||

| − | 3. If you have logs similar to '''WARN: Process locked. Waiting for permission...''' in '''/opt/ossec/logs/ossec.log'''. Click [[FAQs#How to check logs in Linux Ossec Agent | + | 3. If you have logs similar to '''WARN: Process locked. Waiting for permission...''' in '''/opt/ossec/logs/ossec.log'''. Click [[FAQs#How to check logs in Linux Ossec Agent| here]] to check Linux ossec agent logs<br> |

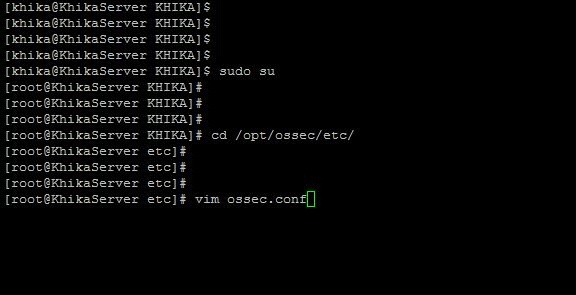

'''Case I: Wrong IP of Aggregator given while installing the agent.'''<br> | '''Case I: Wrong IP of Aggregator given while installing the agent.'''<br> | ||

'''Resolution:'''<br> | '''Resolution:'''<br> | ||

| Line 296: | Line 433: | ||

3. Check for the following line in this file and set the value to '''"0"'''<br> | 3. Check for the following line in this file and set the value to '''"0"'''<br> | ||

'''remoted.verify_msg_id=0'''<br> | '''remoted.verify_msg_id=0'''<br> | ||

| + | Check the following line is set to '''"1"'''<br> | ||

| + | '''logcollector.remote_commands=1'''<br> | ||

4. Close the editor after saving the changes<br> | 4. Close the editor after saving the changes<br> | ||

''':wq'''<br> | ''':wq'''<br> | ||

| Line 302: | Line 441: | ||

ii. '''./ossec-control restart'''<br> | ii. '''./ossec-control restart'''<br> | ||

6. Check if the problem is solved else try following steps:<br> | 6. Check if the problem is solved else try following steps:<br> | ||

| − | 1. [[FAQs#How to Stop OSSEC Server using command line | + | 1. [[FAQs#How to Stop OSSEC Server using command line| Stop ossec server process]]<br> |

| − | 2. [[FAQs#How to Stop OSSEC Agent using command line | + | 2. [[FAQs#How to Stop OSSEC Agent using command line| Stop Ossec agent process]]<br> |

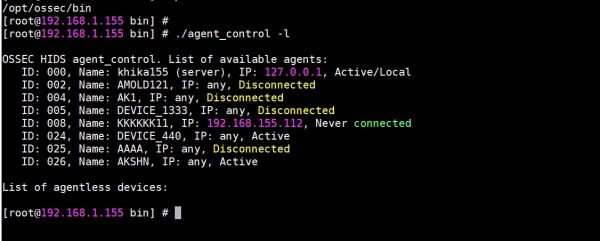

'''Note :''' To find your agent ID, run "'''agent_control -l'''" in the '''/opt/ossec/bin''' directory.<br> | '''Note :''' To find your agent ID, run "'''agent_control -l'''" in the '''/opt/ossec/bin''' directory.<br> | ||

3. Ossec Server Side Resolution<br> | 3. Ossec Server Side Resolution<br> | ||

| Line 314: | Line 453: | ||

ii. Go to the directory /opt/ossec/queue/rids/ using "'''cd /opt/ossec/queue/rids/'''" <br> | ii. Go to the directory /opt/ossec/queue/rids/ using "'''cd /opt/ossec/queue/rids/'''" <br> | ||

iii. type '''rm -rf *''' in this directory.<br> | iii. type '''rm -rf *''' in this directory.<br> | ||

| − | 5. [[FAQs#How to Start OSSEC Server using command line | + | 5. [[FAQs#How to Start OSSEC Server using command line| Start ossec server process]]<br> |

| − | 6. [[FAQs#How to Start OSSEC Agent using command line | + | 6. [[FAQs#How to Start OSSEC Agent using command line|Start Ossec agent process]]<br> |
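The two internal_options.conf settings mentioned in the resolution above can be read back without opening an editor. This is a minimal sketch; the helper name get_option is hypothetical, and the file location in the usage comment assumes the /opt/ossec/etc directory used earlier on this page.

```shell
#!/bin/sh
# get_option (hypothetical helper): prints the value of KEY from a KEY=VALUE
# style file. Commented-out lines are ignored because their key does not
# match exactly; if the key appears more than once, the last value wins.
get_option() {
  awk -F'=' -v key="$2" '
    { k = $1; gsub(/[[:space:]]/, "", k) }
    k == key { v = $2; gsub(/[[:space:]]/, "", v); print v }
  ' "$1" | tail -n 1
}

# Example usage (path is an assumption):
#   get_option /opt/ossec/etc/internal_options.conf remoted.verify_msg_id        # expect 0
#   get_option /opt/ossec/etc/internal_options.conf logcollector.remote_commands # expect 1
```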

====Ossec Issue on Windows Client Side.==== | ====Ossec Issue on Windows Client Side.==== | ||

| − | '''Note:''' We must install the Ossec agent on windows using Administrator(Local Admin). | + | '''Note:''' We must install the Ossec agent on windows using Administrator(Local Admin).<br> |

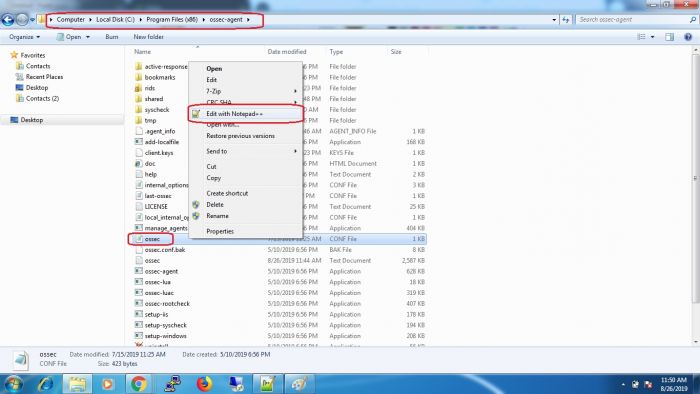

| − | 1. If you have logs similar to '''WARN: Process locked. Waiting for permission...''' in '''ossec.log'''.Click [[FAQs#How to check logs in Windows Ossec Agent | + | 1. If you have logs similar to '''WARN: Process locked. Waiting for permission...''' in '''ossec.log'''. Click [[FAQs#How to check logs in Windows Ossec Agent| here]] to check Windows ossec agent logs<br> |

'''Resolution:'''<br> | '''Resolution:'''<br> | ||

1. Login to OSSEC Agent and check the file "'''internal_options.conf'''" which is present in the directory '''"C:\Program Files (x86)\ossec-agent"''' and open it.<br> | 1. Login to OSSEC Agent and check the file "'''internal_options.conf'''" which is present in the directory '''"C:\Program Files (x86)\ossec-agent"''' and open it.<br> | ||

2. Check for the following line in this file and set the value to "0" and save it.<br> | 2. Check for the following line in this file and set the value to "0" and save it.<br> | ||

'''remoted.verify_msg_id=0'''<br> | '''remoted.verify_msg_id=0'''<br> | ||

| − | 3. Restart Ossec Agent<br> | + | Check the following line is set to '''"1"'''<br> |

| − | [[File:Windows_agent1.jpg | | + | '''logcollector.remote_commands=1'''<br> |

| + | 3. [[FAQs#How to Restart Windows Ossec Agent|Restart Ossec Agent]]<br> | ||

| + | [[File:Windows_agent1.jpg | 250px]]<br> | ||

4. Check if the problem is solved else try following steps<br> | 4. Check if the problem is solved else try following steps<br> | ||

| − | 1. Stop Ossec Server Process<br> | + | 1. [[FAQs#How to Stop OSSEC Server using command line|Stop Ossec Server Process]]<br> |

2. Stop Ossec Agent Process<br> | 2. Stop Ossec Agent Process<br> | ||

| + | [[File:Windows_device_11.jpg | 250px]] | ||

'''Note:''' To find your agent ID, run "'''agent_control -l'''" in the /opt/ossec/bin directory on the ossec server.<br> | '''Note:''' To find your agent ID, run "'''agent_control -l'''" in the /opt/ossec/bin directory on the ossec server.<br> | ||

3. Ossec Server Side Resolution<br> | 3. Ossec Server Side Resolution<br> | ||

| Line 340: | Line 482: | ||

[[File:Ossec device9.jpg| 700px]]<br> | [[File:Ossec device9.jpg| 700px]]<br> | ||

6. Start Ossec windows Agent.<br> | 6. Start Ossec windows Agent.<br> | ||

| − | [[File:Windows_device_3.jpg| | + | [[File:Windows_device_3.jpg| 250px]]<br> |

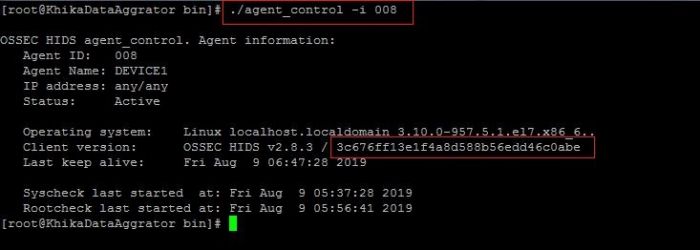

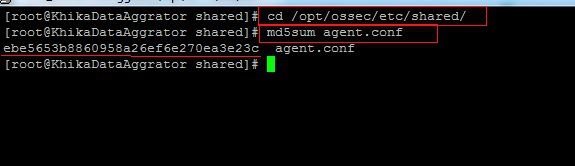

=== Data Collection Issue even if the agent is successfully connected to OSSEC Server.=== | === Data Collection Issue even if the agent is successfully connected to OSSEC Server.=== | ||

| Line 357: | Line 499: | ||

[[File:Windows_device_5.jpg | 700px]]<br> | [[File:Windows_device_5.jpg | 700px]]<br> | ||

6. Check If this md5sum matches with the checksum of your agent we noted earlier.<br> | 6. Check If this md5sum matches with the checksum of your agent we noted earlier.<br> | ||

| − | 7. restart | + | 7. If the md5sums do not match, restart the [[FAQs#How to Restart Windows Ossec Agent|Ossec Agent]] and the [[FAQs#How to Restart OSSEC Server|Ossec Server Process]].<br> |

====Auditing is not enabled on agent.==== | ====Auditing is not enabled on agent.==== | ||

| − | For windows | + | For Windows server devices, KHIKA monitors the Windows security and system event logs. Check that proper audit policies are configured on the Windows server so that events are logged and the event data can be integrated with KHIKA.<br> |

| − | For linux related devices, KHIKA ossec agent fetches data from different types of files such as "'''/var/log/secure'''" , "'''/var/log/messages'''" , "'''/var/log/maillog'''" etc. Please check if Linux server is generating logs on the server itself.<br> | + | For linux related devices, KHIKA ossec agent fetches data from different types of files such as "'''/var/log/secure'''" , "'''/var/log/messages'''" , "'''/var/log/maillog'''" etc. Please check if Linux server is generating logs on the server itself (i.e. logs are not being forwarded to another server).<br> |

| − | If the problem persists, | + | If the problem persists, please reinstall the Ossec agent. (Make sure you are root while installing on Linux and an administrator while installing on a Windows device.)<br> |

'''Note:''' If none of the above cases matches your problem or solves the issue, please try to reinstall the ossec agent. | '''Note:''' If none of the above cases matches your problem or solves the issue, please try to reinstall the ossec agent. | ||

1. [[FAQs#How to Reinstall OSSEC Agent for Windows | Reinstall Windows OSSEC Agent]]<br> | 1. [[FAQs#How to Reinstall OSSEC Agent for Windows | Reinstall Windows OSSEC Agent]]<br> | ||

2. [[FAQs#How to Reinstall OSSEC Agent for Linux | Reinstall Linux OSSEC Agent]] | 2. [[FAQs#How to Reinstall OSSEC Agent for Linux | Reinstall Linux OSSEC Agent]] | ||

| + | |||

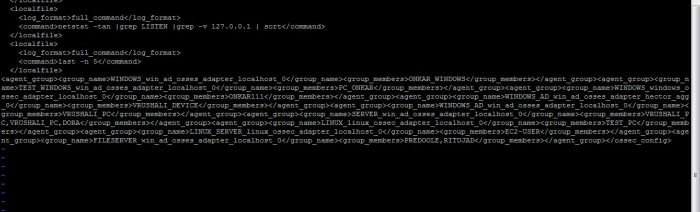

| + | ====Check if the ossec agent belongs to the correct ossec group in ossec.conf==== | ||

| + | When an ossec based device is added, it gets added to the list of devices that belong to an appropriate ossec group (with name as '<WORKSPACE>_<ADAPTER>_<AGGREGATOR>_<PREFIX>') in '''ossec.conf''' configuration file on the ossec server. The OSSEC Group defines the output file in which the logs received from OSSEC Agent are written on the Data Aggregator and parsed for the corresponding Adapter.<br> | ||

| + | |||

| + | If your device (TEST_DEVICE) is added under the '''TEST_WINDOWS''' workspace, the adapter to which it is added is '''win_ad_ossec_adapter''', and the adapter runs on node '''localhost''', then the ossec group name will be as given below.<br> '''TEST_WINDOWS_win_ad_osses_adapter_localhost_0'''<br> | ||

| + | |||

| + | Next we need to check if the device is added in the group members list as shown below.<br> | ||

| + | To check the configuration of file on ossec server, log on to the KHIKA DATA AGGREGATOR where ossec server is running. Please refer to the screenshot given below:<br> | ||

| + | [[File: Ossec_faq_profile_1.jpg| 700px]]<br> | ||

| + | <br> | ||

| + | [[File:Ossec_server_groups.JPG | 700px]]<br> | ||

| + | |||

| + | The record in ossec.conf for the device should be as shown below: <br> | ||

| + | '''<agent_group><group_name>TEST_WINDOWS_win_ad_osses_adapter_localhost_0</group_name><group_members>TEST_DEVICE</group_members></agent_group>'''<br> | ||

| + | |||

| + | This configuration makes sure that the logs received from TEST_DEVICE are stored in the appropriate location.<br> In this case the logs for the device TEST_DEVICE will be stored in the directory '''/opt/ossec/logs/archives/<current_year>/<current_month>/TEST_WINDOWS_win_ad_osses_adapter_localhost_0'''.<br> | ||

| + | |||

| + | If not, please try restarting OSSEC Services.<br> | ||
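The group membership check described above can be sketched on the command line. This is a minimal sketch that assumes each agent_group record sits on a single line, as in the example record shown above; the helper name group_members and the ossec.conf path in the usage comment are assumptions.

```shell
#!/bin/sh
# group_members (hypothetical helper): prints the <group_members> value of
# the agent_group record whose <group_name> equals GROUP. Assumes the whole
# record is on one line.
group_members() {
  grep -F "<group_name>$2</group_name>" "$1" |
    sed -n 's:.*<group_members>\(.*\)</group_members>.*:\1:p'
}

# Example usage on the KHIKA Data Aggregator (path is an assumption):
#   group_members /opt/ossec/etc/ossec.conf TEST_WINDOWS_win_ad_osses_adapter_localhost_0
# The device name (TEST_DEVICE in the example above) should appear in the output.
```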

| + | |||

| + | ====Check if profile is configured correctly on OSSEC Agent.==== | ||

| + | Agents can be grouped together in order to send them unique centralized configuration that is group specific. <br> | ||

| + | We can have different sets of configurations for different groups of agents. The '''agent.conf''' configuration file, which is present on the ossec server (KHIKA DATA AGGREGATOR), defines configurations for agents, each identified by a config_profile.<br> | ||

| + | config_profile specifies the agent.conf profiles to be used by the agent.<br> | ||

| + | Make sure that your agent is using the correct '''config_profile'''.<br> | ||

| + | The config_profile is present in the '''ossec.conf''' file on the agent.<br> | ||

| + | 1. Open the '''ossec.conf''' file on the Windows agent.<br> | ||

| + | Log on to the Windows server where the ossec agent is installed and do the following to open the ossec.conf file.<br> | ||

| + | [[File:Open_ossec_conf_file_on_windows.jpg| 700px]]<br> | ||

| + | 2. Open the '''ossec.conf''' file on the Linux agent.<br> | ||

| + | Log on to the Linux server where the ossec agent is installed and do the following to open the ossec.conf file.<br> | ||

| + | [[File:Ossec_faq_profile_1.jpg | 700px]]<br> | ||

| + | |||

| + | Make sure that the correct config_profile is set in your ossec agent.<br> | ||

| + | If your device is under the windows critical servers group, make sure its ossec.conf file has windows_critical_servers as the config_profile so that the correct configuration is pushed to the agent.<br> | ||

| + | For example, the ossec.conf file on your Windows server should look something like this:<br> | ||

| + | <ossec_config> | ||

| + | <client> | ||

| + | <server-ip>x.x.x.x</server-ip> | ||

| + | <config-profile>windows_critical_servers</config-profile> | ||

| + | </client> | ||

| + | </ossec_config> | ||
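On a Linux agent, the configured profile can be read straight from ossec.conf. This is a minimal sketch that assumes the config-profile element is written on a single line, as in the example above; the helper name config_profile and the file path in the usage comment are assumptions.

```shell
#!/bin/sh
# config_profile (hypothetical helper): prints the text between
# <config-profile> and </config-profile> in the given ossec.conf.
config_profile() {
  sed -n 's:.*<config-profile>\(.*\)</config-profile>.*:\1:p' "$1"
}

# Example usage on a Linux agent (path is an assumption):
#   config_profile /opt/ossec/etc/ossec.conf
```

If the command prints nothing, the agent has no config-profile set and will not receive a profile-specific block from agent.conf.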

=== Failing to Remove Ossec based device.=== | === Failing to Remove Ossec based device.=== | ||

| Line 379: | Line 562: | ||

[[File: Windows_device_7.jpg|700px]]<br> | [[File: Windows_device_7.jpg|700px]]<br> | ||

| − | If it shows that the aggregator is not connected to KHIKA Server, it means that you aggregator is not connected to KHIKA AppServer. | + | If it shows that the aggregator is not connected to KHIKA Server, it means that your aggregator is not connected to KHIKA AppServer. Click [[FAQs#Troubleshoot connection error between KHIKA appserver to Data Aggregator.|here]] to troubleshoot the connection between the aggregator and the KHIKA AppServer. |

===How to Find list of ossec agents along with it's status on command line=== | ===How to Find list of ossec agents along with it's status on command line=== | ||

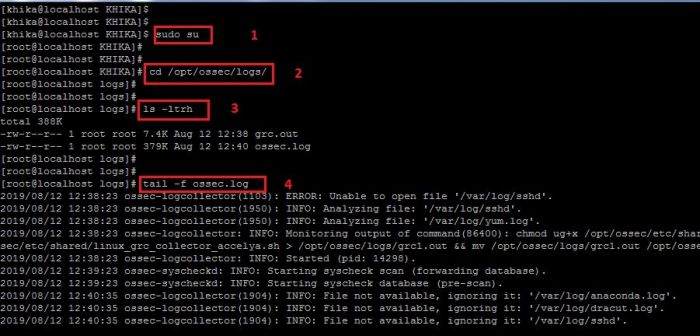

| Line 411: | Line 594: | ||

8. This is how you can check the logs of your ossec agent for troubleshooting.<br> | 8. This is how you can check the logs of your ossec agent for troubleshooting.<br> | ||

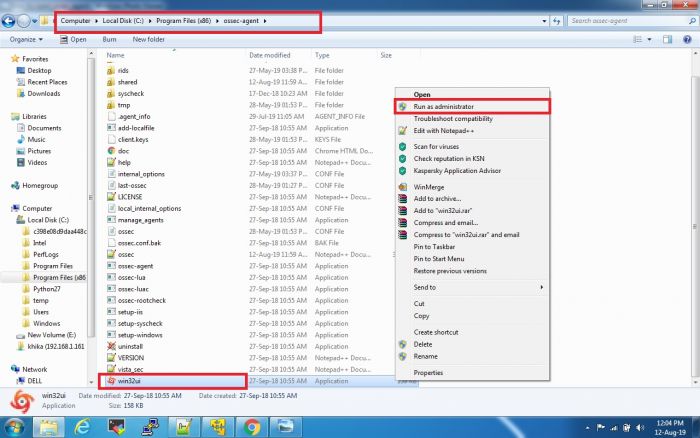

| − | ===How to check logs in Windows Ossec Agent | + | ===How to check logs in Windows Ossec Agent=== |

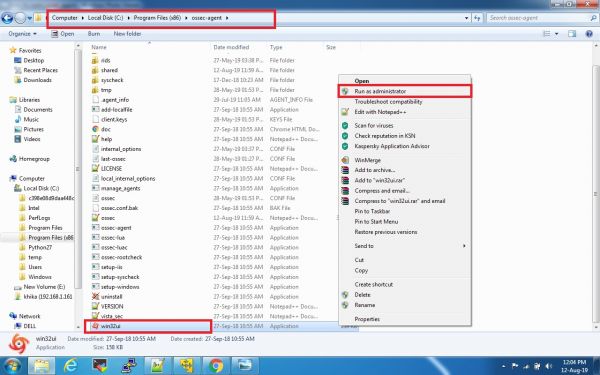

1. Open Manage Agent Application which is available in all programs or go to the following path: | 1. Open Manage Agent Application which is available in all programs or go to the following path: | ||

| − | '''C:\Program Files (x86)\ossec-agent''' | + | '''C:\Program Files (x86)\ossec-agent''' |

2. Search for '''win32ui''' in this directory and open it using Run as Administrator. <br> | 2. Search for '''win32ui''' in this directory and open it using Run as Administrator. <br> | ||

3. Please refer to the screenshot given below.<br> | 3. Please refer to the screenshot given below.<br> | ||

[[File:Win119.jpg|700px]] <br> | [[File:Win119.jpg|700px]] <br> | ||

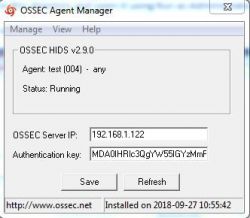

4. This will open a window as given below: <br> | 4. This will open a window as given below: <br> | ||

| − | [[File:Win120.jpg| | + | [[File:Win120.jpg|250px]]<br> |

5. Click on the view tab and then click on log to open the ossec agent's log file.<br> | 5. Click on the view tab and then click on log to open the ossec agent's log file.<br> | ||

'''Note''': This file is used for debugging the problem related to the connection with ossec server.<br> | '''Note''': This file is used for debugging the problem related to the connection with ossec server.<br> | ||

| − | [[File:Win128.jpg| | + | [[File:Win128.jpg|250px]]<br> |

6. This operation will open a windows ossec agent log which is used for debugging. | 6. This operation will open a windows ossec agent log which is used for debugging. | ||

| − | + | ===How to check OSSEC Server logs=== | |

| − | ===How to check OSSEC Server logs | ||

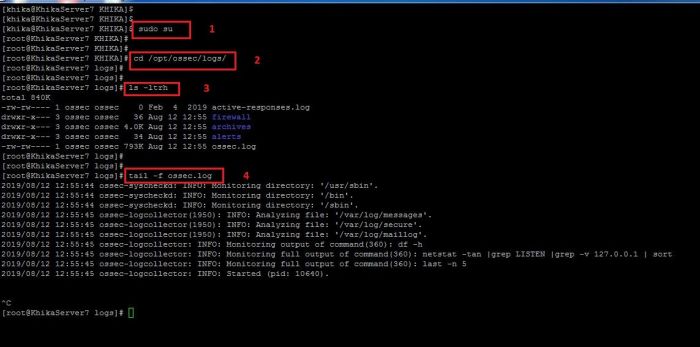

1. To check the logs of the ossec server installed on your KHIKA Aggregator for debugging, go to the following directory:<br> | 1. To check the logs of the ossec server installed on your KHIKA Aggregator for debugging, go to the following directory:<br> | ||

| Line 441: | Line 623: | ||

8. This is how you can check the ossec server-side logs.<br> | 8. This is how you can check the ossec server-side logs.<br> | ||

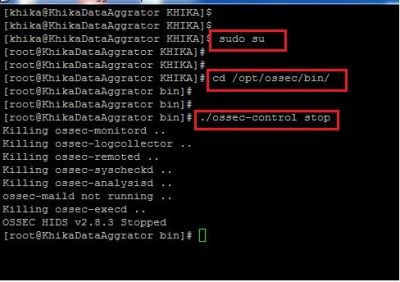

| − | + | ===How to Stop OSSEC Server using command line=== | |

| − | ===How to Stop OSSEC Server using command line | ||

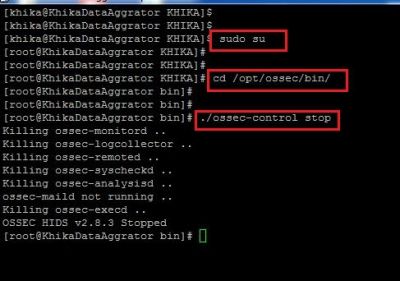

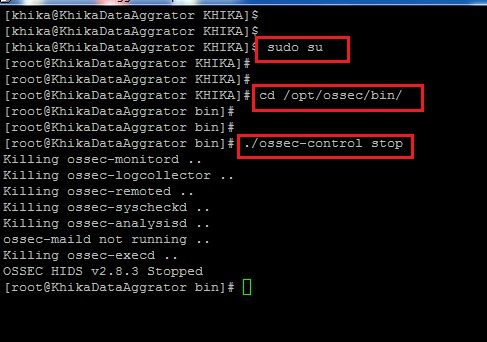

1. To Stop the linux OSSEC Server which is preconfigured on your KHIKA Aggregator you will have to go to the following directory:<br> | 1. To Stop the linux OSSEC Server which is preconfigured on your KHIKA Aggregator you will have to go to the following directory:<br> | ||

| Line 452: | Line 633: | ||

'''./ossec-control stop'''<br> | '''./ossec-control stop'''<br> | ||

6. Refer to the screenshot given below: <br> | 6. Refer to the screenshot given below: <br> | ||

| − | [[File:Win117.jpg| | + | [[File:Win117.jpg|400px]] <br> |

7. Your OSSEC Server is Stopped. | 7. Your OSSEC Server is Stopped. | ||

| − | + | ===How to Stop OSSEC Agent using command line=== | |

| − | ===How to Stop OSSEC Agent using command line | ||

1. To Stop the linux OSSEC Agent which is preconfigured on your Device which you want to monitor, you will have to go to the following directory:<br> | 1. To Stop the linux OSSEC Agent which is preconfigured on your Device which you want to monitor, you will have to go to the following directory:<br> | ||

| Line 469: | Line 649: | ||

7. Your OSSEC Agent is Stopped. | 7. Your OSSEC Agent is Stopped. | ||

| − | ===How to Start OSSEC Server using command line | + | ===How to Start OSSEC Server using command line=== |

1. To Start the linux OSSEC Server which is preconfigured on your KHIKA Aggregator you will have to go to the following directory:<br> | 1. To Start the linux OSSEC Server which is preconfigured on your KHIKA Aggregator you will have to go to the following directory:<br> | ||

'''/opt/ossec/bin''' <br> | '''/opt/ossec/bin''' <br> | ||

| Line 481: | Line 661: | ||

7. Your OSSEC Server is Started. | 7. Your OSSEC Server is Started. | ||

| − | ===How to Start OSSEC Agent using command line | + | ===How to Start OSSEC Agent using command line=== |

1. To Start the linux OSSEC Agent which is installed on the Device which you want to monitor, you will have to go to the following directory:<br> | 1. To Start the linux OSSEC Agent which is installed on the Device which you want to monitor, you will have to go to the following directory:<br> | ||

'''/opt/ossec/bin''' <br> | '''/opt/ossec/bin''' <br> | ||

| Line 501: | Line 681: | ||

[[File:Win123.jpg|600px]] <br> | [[File:Win123.jpg|600px]] <br> | ||

5. When Restart is done you will see a pop-up message similar to what is shown below:<br> | 5. When Restart is done you will see a pop-up message similar to what is shown below:<br> | ||

| − | [[File:Win124.jpg| | + | [[File:Win124.jpg|400px]] <br> |

6. This is how you can Restart the OSSEC Server using KHIKA GUI. | 6. This is how you can Restart the OSSEC Server using KHIKA GUI. | ||

| − | 7. If you get any error while reloading OSSEC Server, | + | 7. If you get any error while reloading the OSSEC Server, check whether your Aggregator is connected to KHIKA AppServer by clicking [[FAQs#How_to_check_status_of_KHIKA_Aggregator_i.e._Node_.3F|here]] |

| − | |||

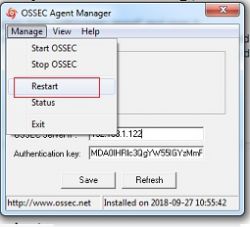

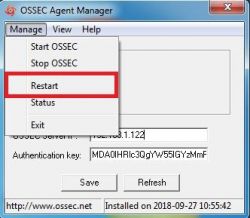

===How to Restart Windows Ossec Agent=== | ===How to Restart Windows Ossec Agent=== | ||

| − | 1. Open Manage Agent Application which is available in all programs or go to the following path: | + | 1. Open Manage Agent Application which is available in all programs or go to the following path:<br> |

| − | '''C:\Program Files (x86)\ossec-agent''' | + | '''C:\Program Files (x86)\ossec-agent'''<br> |

2. Search for '''win32ui''' in this directory and open it using Run as Administrator.<br> | 2. Search for '''win32ui''' in this directory and open it using Run as Administrator.<br> | ||

3. Please refer to the screenshot given below.<br> | 3. Please refer to the screenshot given below.<br> | ||

[[File:Win119.jpg|600px]] <br> | [[File:Win119.jpg|600px]] <br> | ||

| + | <br> | ||

4. This will open a window as given below: <br> | 4. This will open a window as given below: <br> | ||

| − | [[File:Win120.jpg| | + | [[File:Win120.jpg|250px]] <br> |

| + | <br> | ||

5. Click on Manage tab and then click the restart button to restart the ossec agent. <br> | 5. Click on Manage tab and then click the restart button to restart the ossec agent. <br> | ||

'''Note''' : We must open the Ossec Agent Application using run as administrator. <br> | '''Note''' : We must open the Ossec Agent Application using run as administrator. <br> | ||

| − | [[File:Win121.jpg| | + | [[File:Win121.jpg|250px]] <br> |

| + | <br> | ||

6. This operation will restart the windows ossec agent. You can refer to the below screenshot.<br> | 6. This operation will restart the windows ossec agent. You can refer to the below screenshot.<br> | ||

| − | [[File:Win122.jpg| | + | [[File:Win122.jpg|250px]] <br> |

7. Done | 7. Done | ||

| − | ==How to Restart Linux Ossec Agent== | + | ===How to Restart Linux Ossec Agent=== |

1. To restart the linux ossec agent installed on your linux you will have to go to the following directory:<br> | 1. To restart the linux ossec agent installed on your linux you will have to go to the following directory:<br> | ||

'''/opt/ossec/bin''' | '''/opt/ossec/bin''' | ||

| Line 531: | Line 713: | ||

6. Refer to the screenshot given below:<br> | 6. Refer to the screenshot given below:<br> | ||

[[File:Win125.jpg|700px]] <br> | [[File:Win125.jpg|700px]] <br> | ||

| + | <br> | ||

7. Your ossec agent is restarted. | 7. Your ossec agent is restarted. | ||

| + | |||

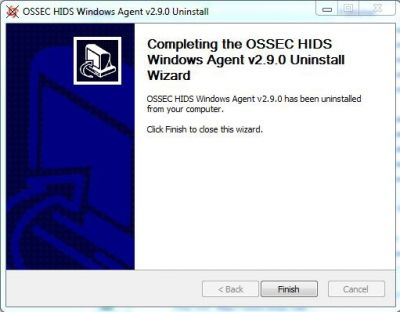

| + | ===How to Reinstall OSSEC Agent for Windows=== | ||

| + | |||

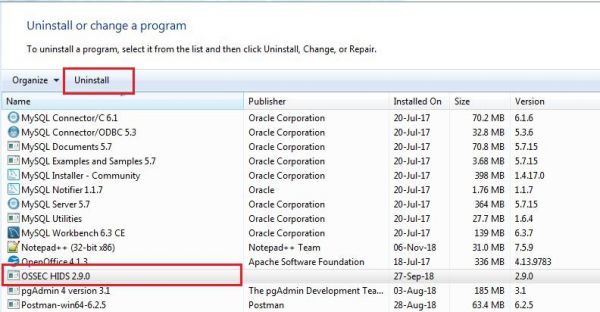

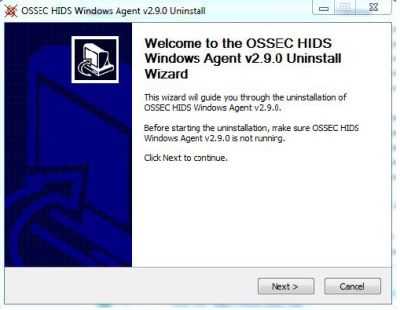

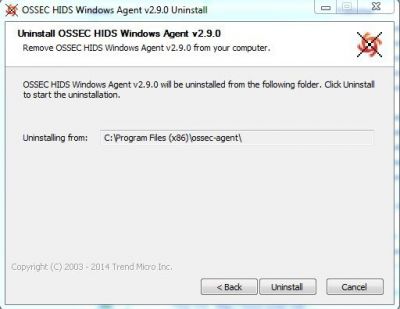

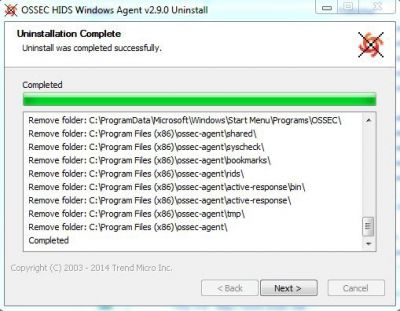

| + | 1. Go to the following path in your windows server: | ||

| + | '''Control Panel -> All Control Panel Items -> Programs and Features''' | ||

| + | 2. Select the OSSEC HIDS Application and then click on uninstall.<br> | ||

| + | [[File:Win111.jpg|600px]]<br> | ||

| + | 3. Follow the uninstallation procedure. Please refer to the screenshots below:<br> | ||

| + | [[File:Win112.jpg|400px]]<br> | ||

| + | [[File:Win113.jpg|400px]]<br> | ||

| + | [[File:Win114.jpg|400px]]<br> | ||

| + | [[File:Win115.jpg|400px]] <br> | ||

| + | 4. Uninstallation of the Windows ossec agent is done. | ||

| + | 5. Now install the OSSEC Agent once again. | ||

| + | '''Note''': Please make sure you use an administrator account to install the OSSEC Agent. | ||

| + | 6. [[Getting Data into KHIKA#Installing OSSEC Agent for Windows|Install ossec agent for Windows]] | ||

| + | |||

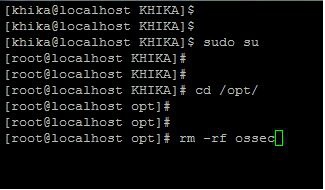

| + | ===How to Reinstall OSSEC Agent for Linux=== | ||

| + | |||

| + | 1. To reinstall the ossec agent for Linux, first uninstall the ossec agent which is already present on your Linux server. | ||

| + | 2. Log in to the Linux server where the ossec agent is installed. | ||

| + | 3. Run the '''sudo su''' command to become '''root'''. | ||

| + | 4. Before proceeding with the uninstallation, stop the ossec agent. Go to the following directory: | ||

| + | '''/opt/ossec/bin''' | ||

| + | 5. Run the '''./ossec-control stop''' command to stop the agent. <br> | ||

| + | [[File:Ossec11.jpg|400px]] <br> | ||

| + | 6. Go to the directory where the ossec agent gets installed, '''(/opt)''', using the following command: | ||

| + | '''cd /opt''' | ||

| + | 7. Remove the ossec directory using the following command: | ||

| + | '''rm -rf ossec/''' <br> | ||

| + | [[File:Ossec121.jpg|400px]] <br> | ||

| + | 8. Now proceed with installing the ossec agent again: | ||

| + | [[Getting Data into KHIKA#Installing OSSEC Agent for Linux|Install ossec agent for Linux]] | ||

== KHIKA Disk Management and Issues == | == KHIKA Disk Management and Issues == | ||

In KHIKA there are generally three kinds of partitions<br> | In KHIKA there are generally three kinds of partitions<br> | ||

1. '''root''' (/) partition which generally contains appserver + data. <br> | 1. '''root''' (/) partition which generally contains appserver + data. <br> | ||

| − | 2. '''Data''' (/data) partition contains index data which include raw, reports and alerts.<br> | + | 2. '''Data''' (/data) partition contains index data which include raw data indices, reports and alerts.<br> |

3. '''Cold/Offline data''' (/offline) partition which is generally NFS mounted partition.<br> | 3. '''Cold/Offline data''' (/offline) partition which is generally NFS mounted partition.<br> | ||

And this type of partition contains offline i.e. archival data which is not searchable.<br> | And this type of partition contains offline i.e. archival data which is not searchable.<br> | ||

| Line 546: | Line 764: | ||

The above command gives a directory-wise space usage summary. | The above command gives a directory-wise space usage summary. | ||

| − | + | === Most probable reasons why Disk is Full=== | |

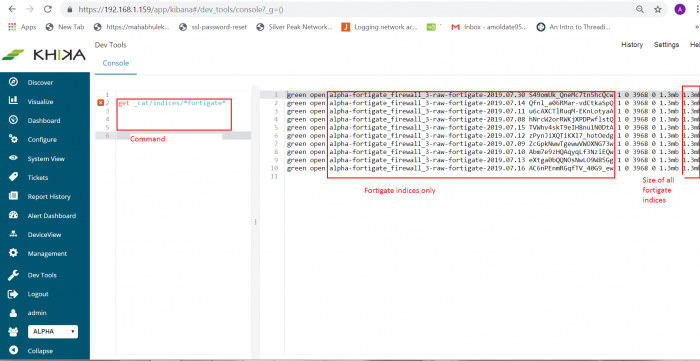

#[[FAQs#Size of indexes representing raw logs grows too much|Size of indexes, representing raw logs grows too much.]] | #[[FAQs#Size of indexes representing raw logs grows too much|Size of indexes, representing raw logs grows too much.]] | ||

#[[FAQs#Log files of KHIKA processes does not get deleted|Log files of KHIKA processes does not get deleted]] (log files of KHIKA processes are huge) | #[[FAQs#Log files of KHIKA processes does not get deleted|Log files of KHIKA processes does not get deleted]] (log files of KHIKA processes are huge) | ||

| Line 571: | Line 789: | ||

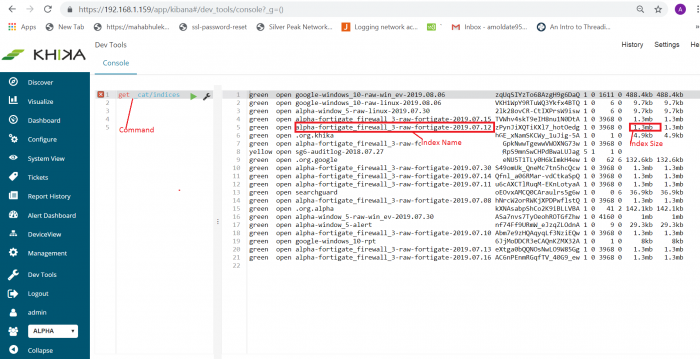

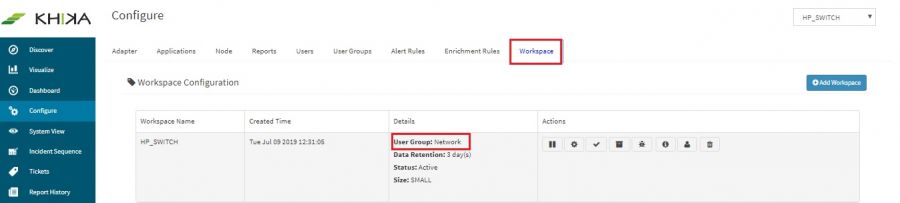

====If you find that disk space is utilized due to raw indices==== | ====If you find that disk space is utilized due to raw indices==== | ||

| − | 1. Make sure that the data retention period (TTL) is reasonable. | + | 1. Make sure that the data retention period (TTL) is reasonable. You can check it in the "Workspace" settings and modify the TTL if required. |

| − | + | Go to "Workspace" tab from "Configure" in left menu and modify it if required. | |

'''configure -> Modify this workspace -> Data Retention -> Add required data retention ->save''' | '''configure -> Modify this workspace -> Data Retention -> Add required data retention ->save''' | ||

| − | 2. Archive using snapshot archival utility( kindly refer steps how to configure it). Note that Archival needs space on the cold-data destination. | + | 2. Archive some data from the current partition into cold data using the snapshot archival utility (kindly refer to the steps on how to configure it). Note that archival needs space on the cold-data destination. |

| − | 3. If there is no | + | 3. If there is no other option to free disk space, delete old large indices. For example, to delete the index “'''alpha-fortigate_firewall_3-raw-fortigate-2019.07.17'''”, use the following commands in dev tools (you must be a KHIKA Admin): |

i. '''POST alpha-fortigate_firewall_3-raw-fortigate-2019.07.17/_close''' | i. '''POST alpha-fortigate_firewall_3-raw-fortigate-2019.07.17/_close''' | ||

ii. '''DELETE alpha-fortigate_firewall_3-raw-fortigate-2019.07.17''' | ii. '''DELETE alpha-fortigate_firewall_3-raw-fortigate-2019.07.17''' | ||

| − | ===Log files of KHIKA processes | + | |

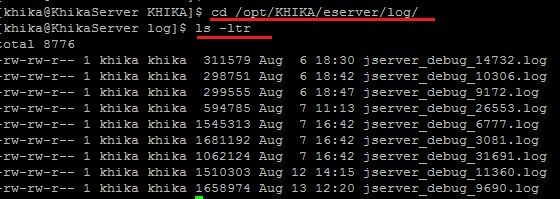

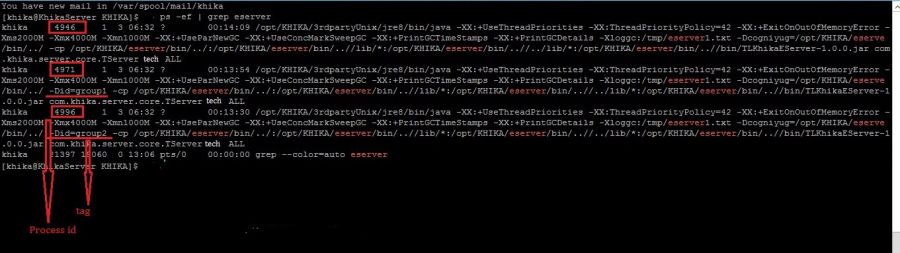

| − | If you found process log files | + | ===Log files of KHIKA processes not deleted=== |

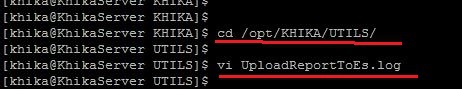

| + | If you find that process log files are not getting deleted:<br> | ||

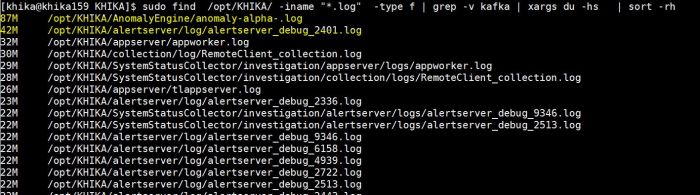

1. Use the following command to find out the disk usage of log files. Log files are stored with the *.log extension. | 1. Use the following command to find out the disk usage of log files. Log files are stored with the *.log extension. | ||

'''sudo find /opt/KHIKA/ -iname "*.log" -type f | grep -v kafka | xargs du -hs | sort -rh''' | '''sudo find /opt/KHIKA/ -iname "*.log" -type f | grep -v kafka | xargs du -hs | sort -rh''' | ||

| Line 593: | Line 812: | ||

'''rm -rf /opt/KHIKA/alertserver/log/alertserver_debug_2336.log''' | '''rm -rf /opt/KHIKA/alertserver/log/alertserver_debug_2336.log''' | ||

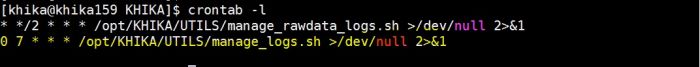

| − | 3. Make | + | 3. Make sure log file clean up cronjob is working (/opt/KHIKA/UTILS/manage_logs.sh)<br> |

| − | + | To check cronjob use following command | |

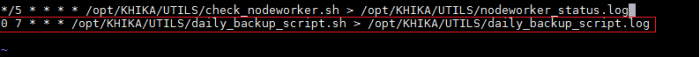

'''# crontab -l''', this will give output as follows. | '''# crontab -l''', this will give output as follows. | ||

[[File: Crontab.jpg | 700px]] | [[File: Crontab.jpg | 700px]] | ||

<br> | <br> | ||

| − | Here clean up cron job | + | Here, the clean-up cron job is configured every day at 7 am. |

| − | 4. If any directory entry is missing from clean up cronjob then add it into "/opt/KHIKA/UTILS/manage_logs.sh" <br> | + | 4. If any directory entry is missing from clean-up cronjob then add it into "/opt/KHIKA/UTILS/manage_logs.sh" <br> |

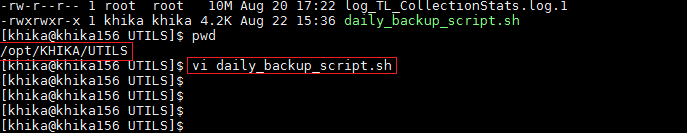

Steps to add missing entry | Steps to add missing entry | ||

| Line 611: | Line 830: | ||

[[File:Save_cron_job.JPG|700px]]<br>

<br> | <br> | ||

5. On the aggregator node, make sure the following property is set to "'''false'''" in the "'''/opt/KHIKA/collection/bin/Cogniyug.properties'''" file.

'''remote.dontdeletefiles = false''' | '''remote.dontdeletefiles = false''' | ||

Open the file '''/opt/KHIKA/collection/bin/Cogniyug.properties''' using a common editor like '''vi/vim''', add the property, then save and exit.<br>

If the property “'''remote.dontdeletefiles'''” is not set to “'''false'''”, the Aggregator creates '''.out''' and '''.done''' files in the directories “'''/opt/KHIKA/collection/Collection'''” and “'''/opt/KHIKA/collection/MCollection'''” and never deletes them, which consumes disk space on the aggregator. Setting the property to false makes the Aggregator delete the .out and .done files.
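The checks in steps 1 and 5 above can be sketched as two small shell helpers. This is only an illustrative sketch: the function names '''khika_log_usage''' and '''khika_dontdelete_value''' are hypothetical and not part of KHIKA, and the first helper assumes GNU find, xargs and sort, as the original command already does. It lists the same files as the command in step 1 but passes file names NUL-separated, so paths containing spaces are handled.

```shell
#!/bin/sh
# Sketch only: hypothetical helpers, not shipped with KHIKA.

# List *.log files under a directory (default: /opt/KHIKA), largest first,
# skipping any path that contains "kafka". NUL-separated names keep paths
# with spaces intact. Assumes GNU find, xargs and sort.
khika_log_usage() {
  root="${1:-/opt/KHIKA}"
  find "$root" -type f -iname '*.log' ! -path '*kafka*' -print0 \
    | xargs -0 -r du -h \
    | sort -rh
}

# Print the value of remote.dontdeletefiles from a properties file,
# or "unset" when the property is absent from the file.
khika_dontdelete_value() {
  file="${1:-/opt/KHIKA/collection/bin/Cogniyug.properties}"
  value=$(sed -n 's/^[[:space:]]*remote\.dontdeletefiles[[:space:]]*=[[:space:]]*//p' "$file" \
    | tail -n 1 | tr -d '[:space:]')
  echo "${value:-unset}"
}
```

For example, after sourcing the file, '''khika_log_usage | head''' shows the largest log files first, and '''khika_dontdelete_value''' should print '''false''' on a correctly configured aggregator.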

===Postgres database size has increased===

If a utility like '''du -csh''' shows that the Postgres data directory ('''/opt/KHIKA/pgsql/data''') is taking a lot of space, find out which tables are taking the most space using the following steps:

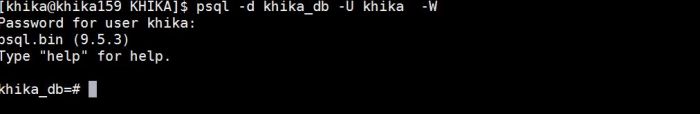

1. To execute SQL commands, you need access to the PostgreSQL console. To get access, run the following commands in the order shown:

• '''. /opt/KHIKA/env.sh''' | • '''. /opt/KHIKA/env.sh''' | ||

• '''psql -d khika_db -U khika -W''' | • '''psql -d khika_db -U khika -W''' | ||

• After entering the above command, it will prompt for a password. Enter the password.

[[File:Db_access.JPG| 700px]]<br> | [[File:Db_access.JPG| 700px]]<br> | ||

| Line 628: | Line 847: | ||

'''SELECT relname as "Table", pg_size_pretty(pg_total_relation_size(relid)) As "Size", pg_size_pretty(pg_total_relation_size(relid) - pg_relation_size(relid)) as "External Size" FROM pg_catalog.pg_statio_user_tables ORDER BY pg_total_relation_size(relid) DESC limit 10;''' | '''SELECT relname as "Table", pg_size_pretty(pg_total_relation_size(relid)) As "Size", pg_size_pretty(pg_total_relation_size(relid) - pg_relation_size(relid)) as "External Size" FROM pg_catalog.pg_statio_user_tables ORDER BY pg_total_relation_size(relid) DESC limit 10;''' | ||

The above SQL command returns the 10 tables occupying the most disk space. Generally, but not necessarily, it returns the following tables.<br>

• '''collection_statistics '''<br> | • '''collection_statistics '''<br> | ||

• '''collection_samples''' <br> | • '''collection_samples''' <br> | ||

| Line 635: | Line 854: | ||

• and '''report related tables''' <br> | • and '''report related tables''' <br> | ||

3. For example, if you find that the '''collection_statistics''' table is taking the most space, delete its data up to the end of 2018 using the following SQL command:

'''delete from collection_statistics where date_hour_str <= '2018-12-31';''' | '''delete from collection_statistics where date_hour_str <= '2018-12-31';''' | ||

OR, if you want to delete from '''collection_samples''' table then use the following command | OR, if you want to delete from '''collection_samples''' table then use the following command | ||

| Line 649: | Line 868: | ||

NOTE: If you find any other tables that are not listed in step (2), contact an administrator.
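Before running the '''delete''' in step 3, it can help to count how many rows it would remove. The sketch below builds a matching '''SELECT count(*)''' statement for the '''collection_statistics''' table, using the same '''date_hour_str''' condition as the delete command above. The function name '''count_old_statistics_sql''' is hypothetical, and the helper only prints SQL; it does not connect to the database.

```shell
#!/bin/sh
# Sketch only: hypothetical helper, not shipped with KHIKA.

# Print a SELECT that counts the rows the step-3 DELETE would remove
# from collection_statistics for a given cutoff date (YYYY-MM-DD).
count_old_statistics_sql() {
  cutoff="$1"
  # Accept only a plain YYYY-MM-DD value so nothing else ends up in the SQL.
  case "$cutoff" in
    [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]) ;;
    *) echo "cutoff must look like YYYY-MM-DD" >&2; return 1 ;;
  esac
  printf "SELECT count(*) FROM collection_statistics WHERE date_hour_str <= '%s';\n" "$cutoff"
}
```

One possible use, after sourcing '''/opt/KHIKA/env.sh''' as in step 1, is '''psql -d khika_db -U khika -W -c "$(count_old_statistics_sql 2018-12-31)"'''. If the count looks reasonable, run the delete command from step 3.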

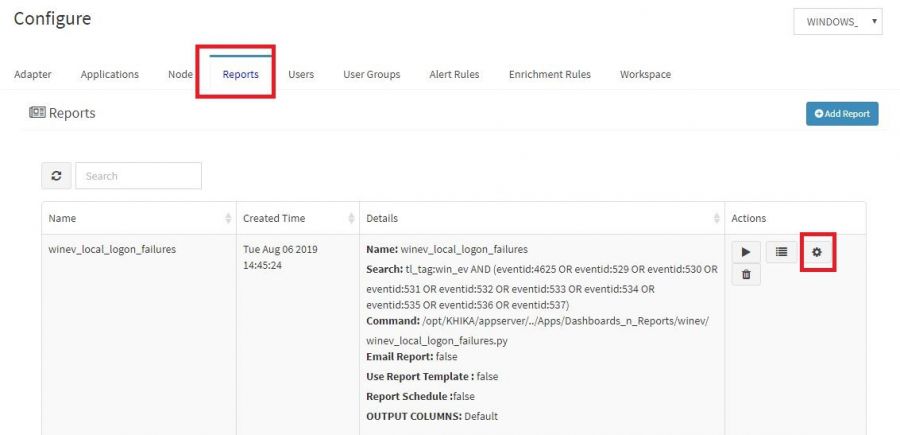

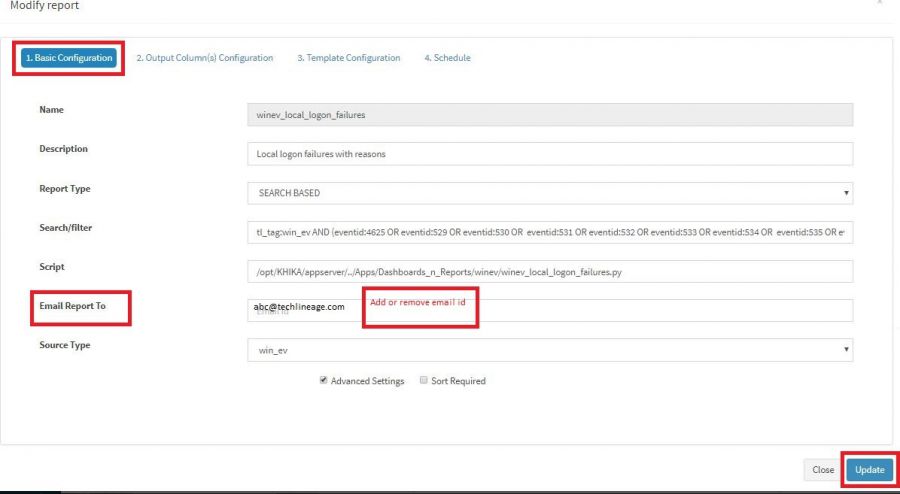

===Report's files not getting archived===

| + | |||

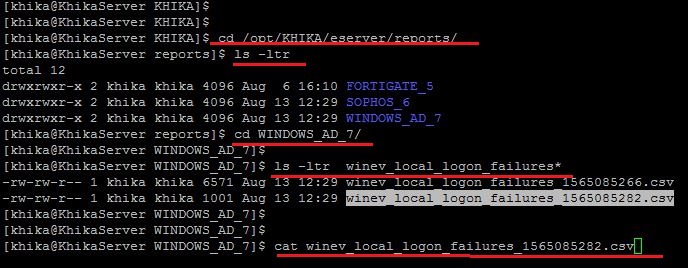

Reports CSV files get stored at location "'''/opt/KHIKA/appserver/reports'''" and "'''/opt/KHIKA/eserver/reports'''" | Reports CSV files get stored at location "'''/opt/KHIKA/appserver/reports'''" and "'''/opt/KHIKA/eserver/reports'''" | ||

If you find that the above-mentioned directories are taking a lot of space, follow these steps:

1. Make sure the archival cron job is configured for reports ('''/opt/KHIKA/UTILS/manage_logs.sh'''). Use the following command to check:

'''crontab -l''' | '''crontab -l''' | ||

[[File: Crontab.jpg | 700px]]<br> | [[File: Crontab.jpg | 700px]]<br> | ||

2. Make sure the following entries are present in the file '''/opt/KHIKA/UTILS/manage_logs.sh''':

• '''find /opt/KHIKA/appserver/reports -mtime +7 -type f | xargs gzip'''<br> | • '''find /opt/KHIKA/appserver/reports -mtime +7 -type f | xargs gzip'''<br> | ||

• '''find /opt/KHIKA/tserver/reports -mtime +7 -type f | xargs gzip'''<br> | • '''find /opt/KHIKA/tserver/reports -mtime +7 -type f | xargs gzip'''<br> | ||

| Line 663: | Line 883: | ||

If above entry is missing then add it using common editor like vi or vim. | If above entry is missing then add it using common editor like vi or vim. | ||

3. If the reports are too old and there is no other option to free disk space, delete them. Use the following commands to delete report files older than 1 year:

• '''find /opt/KHIKA/appserver/reports -type f -mtime +365 -delete'''<br> | • '''find /opt/KHIKA/appserver/reports -type f -mtime +365 -delete'''<br> | ||

• '''find /opt/KHIKA/tserver/reports -type f -mtime +365 -delete'''<br> | • '''find /opt/KHIKA/tserver/reports -type f -mtime +365 -delete'''<br> | ||

• '''find /opt/KHIKA/eserver/reports -type f -mtime +365 -delete'''<br> | • '''find /opt/KHIKA/eserver/reports -type f -mtime +365 -delete'''<br> | ||
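Because '''-delete''' cannot be undone, it may be worth listing the matching files first. The sketch below runs the same '''find''' test as the commands above but prints the files instead of deleting them. The function name '''list_old_files''' is hypothetical.

```shell
#!/bin/sh
# Sketch only: hypothetical helper, not shipped with KHIKA.

# Print regular files under a directory whose modification time is
# more than the given number of days ago (default: 365). Nothing is deleted.
list_old_files() {
  dir="$1"
  days="${2:-365}"
  find "$dir" -type f -mtime +"$days" -print
}
```

For example, '''list_old_files /opt/KHIKA/appserver/reports 365''' shows what the first delete command above would remove.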

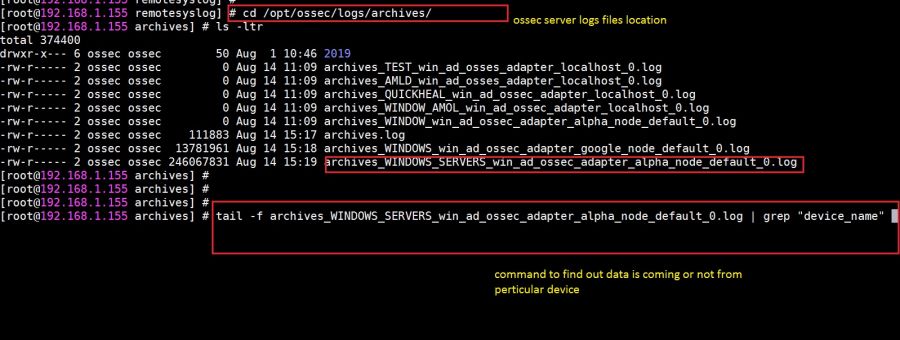

===Raw log files not getting archived and deleted for ossec and syslog devices===

On Aggregator node, Raw logs are stored at "'''/opt/ossec/logs/archives'''" for Ossec devices and "'''/opt/remotesyslog'''" for Syslog devices. | On Aggregator node, Raw logs are stored at "'''/opt/ossec/logs/archives'''" for Ossec devices and "'''/opt/remotesyslog'''" for Syslog devices. | ||

On Aggregator by default we keep raw logs only for three days. | On Aggregator by default we keep raw logs only for three days. | ||

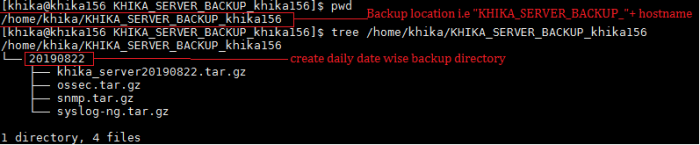

If you find raw logs older than three days, delete them and configure a cron job to do the same. Add the following script as a cron job: "'''/opt/KHIKA/UTILS/manage_rawdata_logs.sh'''"

Steps to add a cron job:

1. Log in as user '''khika''' on the KHIKA Aggregator server.

2. Enter '''crontab -e''' command. | 2. Enter '''crontab -e''' command. | ||

| Line 683: | Line 903: | ||

===Cold/Offline storage partition gets full or unmounted=== | ===Cold/Offline storage partition gets full or unmounted=== | ||

| + | |||

====If cold/offline storage partition gets full==== | ====If cold/offline storage partition gets full==== | ||

Every organization keeps cold data according to its data retention policy (1 year, 2 years, 420 days, etc.). If there is data older than the organization's retention period, delete it.

| Line 700: | Line 921: | ||

2. Contact server administrator to mount offline storage | 2. Contact server administrator to mount offline storage | ||

===Elasticsearch snapshot utility not working properly===

Elasticsearch Snapshot utility raises an alert when it fails to take a snapshot.

====Alert status is "archival_process_stuck"==== | ====Alert status is "archival_process_stuck"==== | ||

Alert status message "archival_process_stuck" indicates that the process is taking more than 24 hours for a single bucket. This may happen because a script terminated abnormally or a compression operation is taking longer than expected. Check the logs to find the issue.

Find the current state of the most recent archival and change it accordingly.

To change the current state of an archival, you need PostgreSQL access. Use the following commands.

To get access of PostgreSQL<br> | To get access of PostgreSQL<br> | ||

| Line 711: | Line 933: | ||

2. '''psql -d khika_db -U khika -W''' | 2. '''psql -d khika_db -U khika -W''' | ||

[[File:Db_access.JPG| 700px]]<br> | [[File:Db_access.JPG| 700px]]<br> | ||

3. After entering the above command, it will prompt for a password. Enter the password.

1. If the archival bucket state is "COMPRESSING" or "COMPRESSING_FAILED", set its state to "SUCCESS" using the following SQL command:<br>

| Line 759: | Line 981: | ||

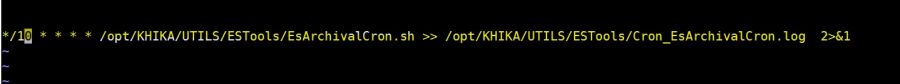

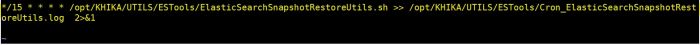

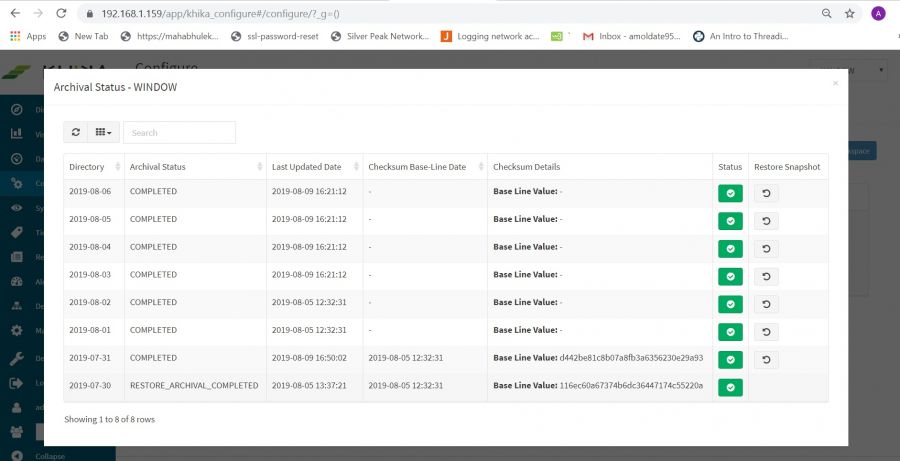

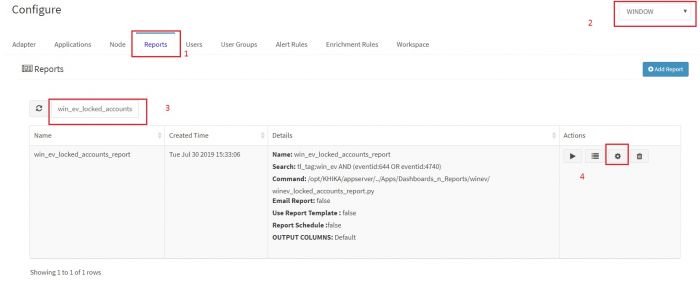

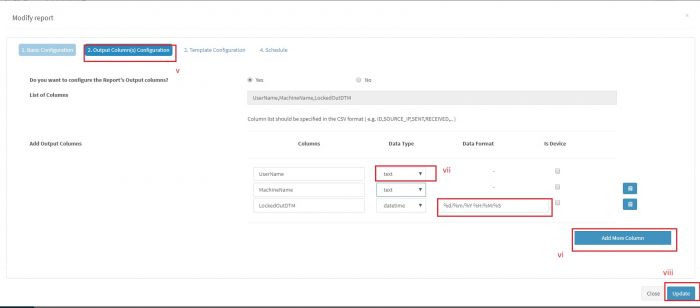

==Elasticsearch Snapshot functionality configuration== | ==Elasticsearch Snapshot functionality configuration== | ||

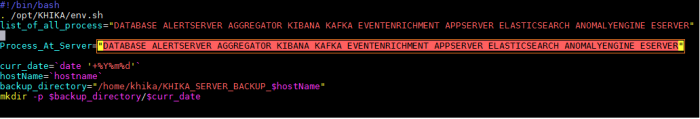

Elasticsearch snapshot functionality is essentially data archival functionality.<br>

'''Configuration:'''

To set up snapshot/restore functionality, you need to configure the following:

# ElasticSearchSnapshotRestoreUtils.sh
# EsArchivalCron.sh
# TLHookCat.py
# elasticsearch_archival_process_failed alert

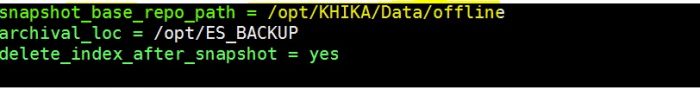

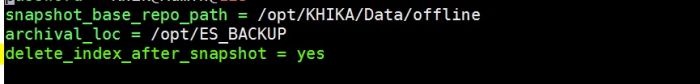

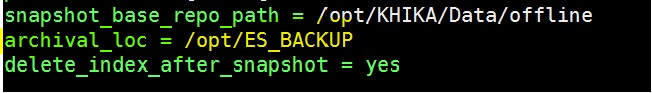

===Configuration of ElasticSearchSnapshotRestoreUtils.sh=== | ===Configuration of ElasticSearchSnapshotRestoreUtils.sh=== | ||

The function of '''ElasticSearchSnapshotRestoreUtils.sh''' is to take snapshots according to the “'''Time to Live'''” (TTL) setting of the workspace and to restore snapshots as and when necessary.<br>

To configure “ElasticSearchSnapshotRestoreUtils.sh” you need to set the following properties<br> | To configure “ElasticSearchSnapshotRestoreUtils.sh” you need to set the following properties<br> | ||

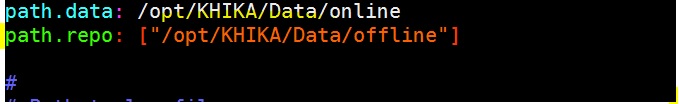

1. '''path.repo'''<br> | 1. '''path.repo'''<br> | ||

Need to put this property in elasticsearch configuration file “'''/opt/KHIKA/elasticsearch/config/elasticsearch.yml'''”<br> | Need to put this property in elasticsearch configuration file “'''/opt/KHIKA/elasticsearch/config/elasticsearch.yml'''”<br> | ||

Use a common editor like vim/vi to edit the configuration file (see the screenshot below).<br>

[[File:Elastic1.jpg| 700px]]<br> | [[File:Elastic1.jpg| 700px]]<br> | ||

here '''path.repo''' is “'''/opt/KHIKA/Data/offline'''”<br> | here '''path.repo''' is “'''/opt/KHIKA/Data/offline'''”<br> | ||

| Line 966: | Line 1,189: | ||

====What to do if something goes wrong for snapshot restore functionality==== | ====What to do if something goes wrong for snapshot restore functionality==== | ||

Elasticsearch Snapshot utility raises an alert when it fails to take a snapshot. For problems related to snapshot restore functionality, see [[FAQs#Elasticsearch snapshot utility not working properly|here]].

| + | |||

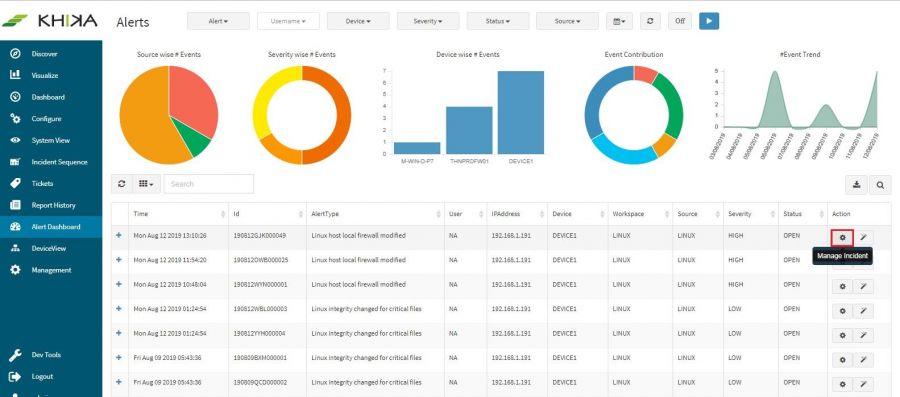

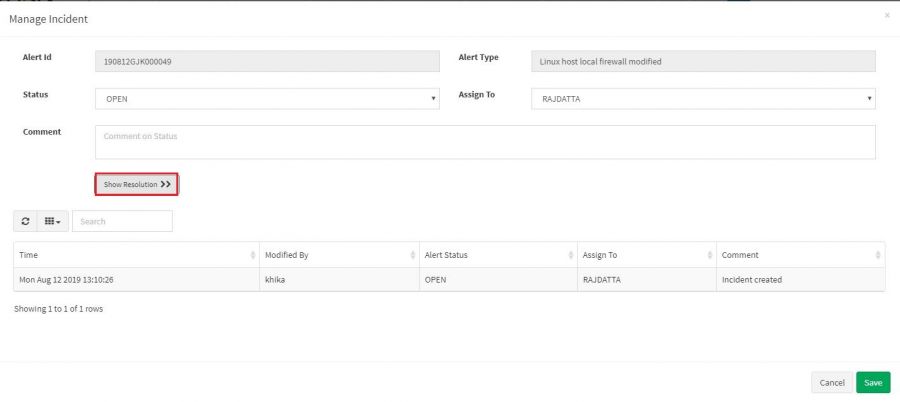

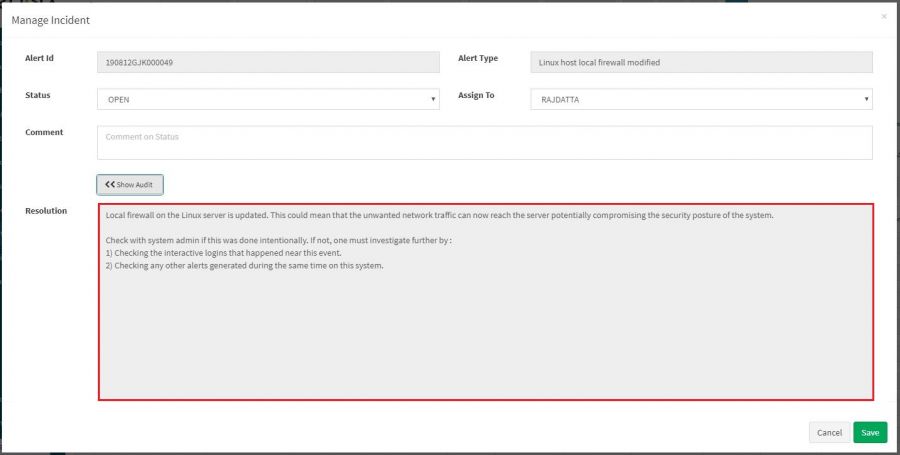

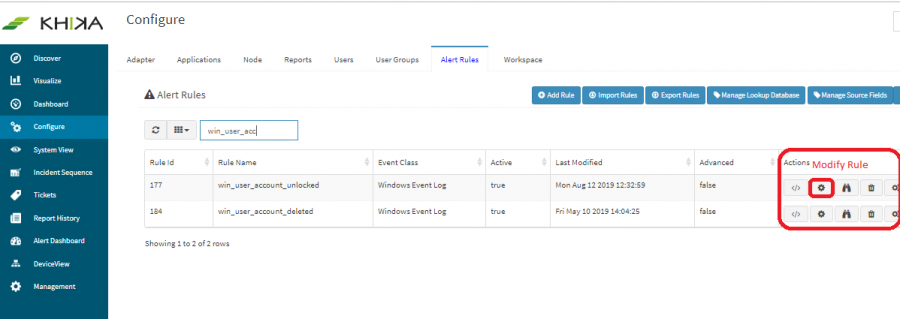

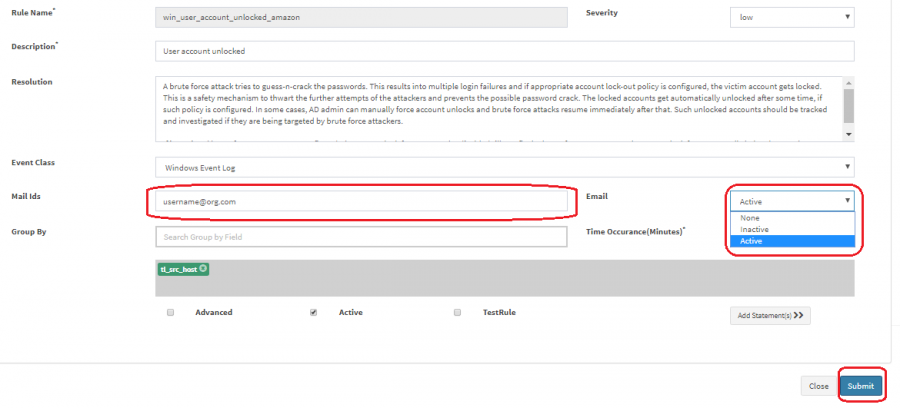

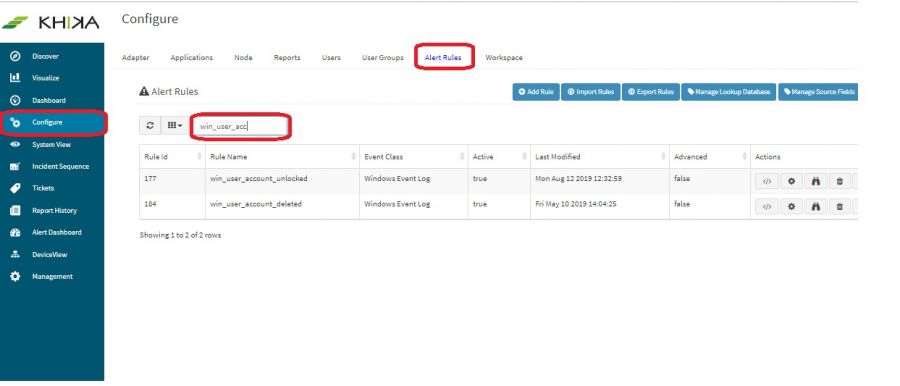

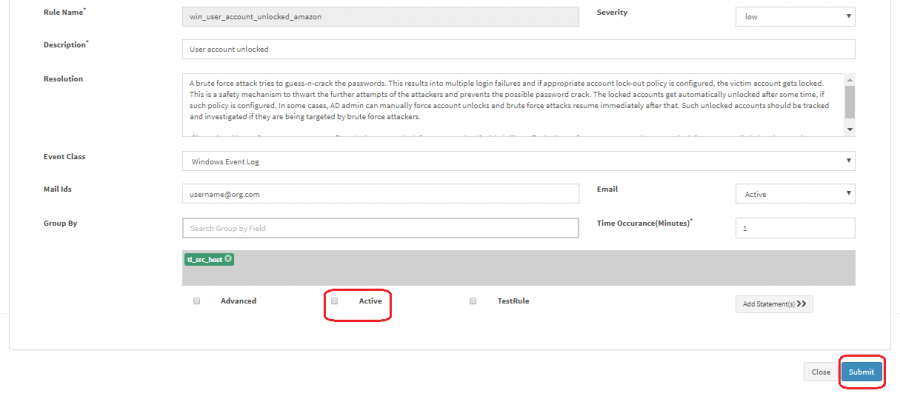

==Alerts in KHIKA==

===What to do when an alert is triggered===

Resolutions are written for each rule and describe the possible actions to be taken by the concerned team. Refer to the following screenshots.

[[File:Alert_faq_7.JPG | 900px]]<br>

[[File:Alert_faq_8.JPG| 900px]]

[[File:Alert_faq_9.JPG| 900px]]

| + | |||

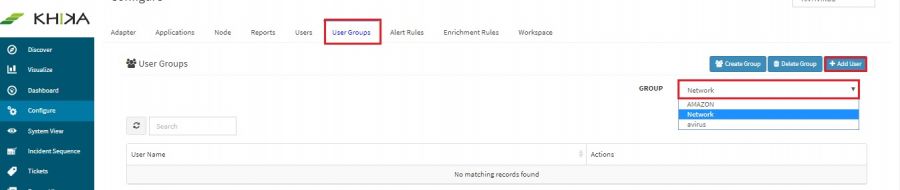

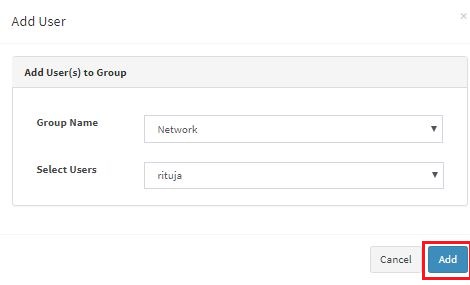

===How to provide access to alerts for a workspace to User?===

For a given workspace, alerts can be viewed only by the users who are part of the User Group associated with the workspace. Hence, to grant a User access to alerts, the User needs to be added to the User Group associated with the Workspace.

| + | |||

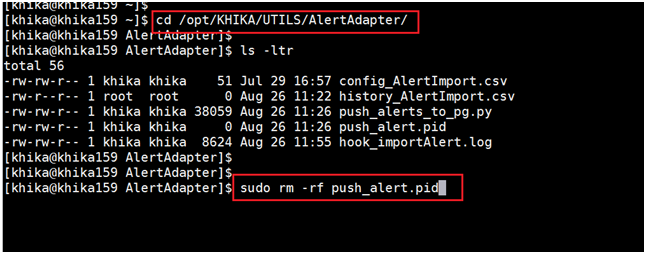

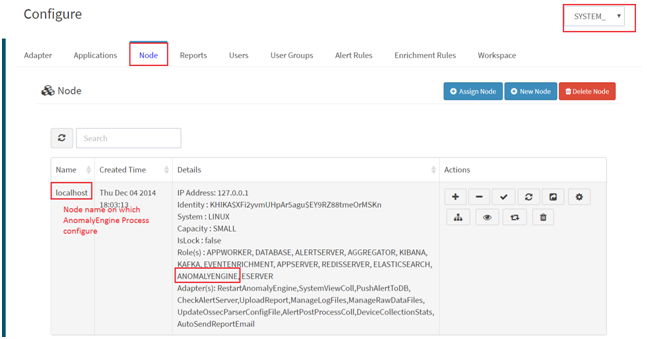

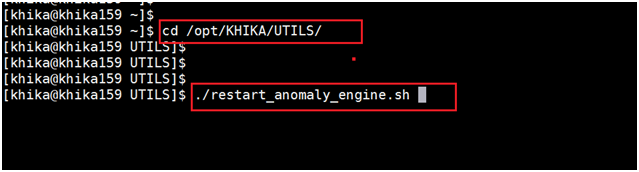

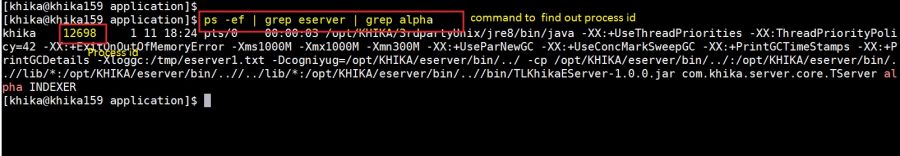

===What to do if alerts are not visible on the Alert Dashboard despite alerts being raised or alert emails being received?===

Alerts are not visible on the Alert Dashboard mainly due to the following reasons:

* Database connection error
* Alert Adapter PID file is empty/corrupt
* AnomalyEngine process is not running

To address the above issues, proceed as described below:<br>

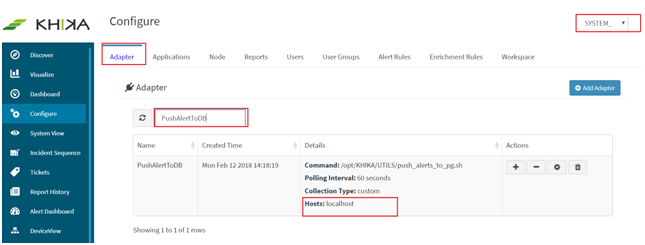

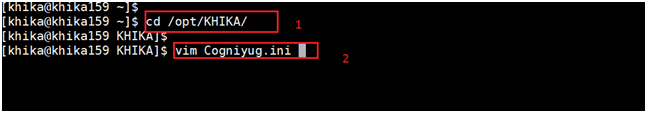

'''Database connection error''' - In this case, the Alert Adapter fails to push alerts into the Postgres database because of a connection issue caused by incorrect database configuration. Fix it by correcting the database configuration on all KHIKA nodes where the alert adapter is running. To find the nodes on which the alert adapter is running:

i) Go to Configure ---> select the Adapter tab ---> select the “SYSTEM_MANAGEMENT” workspace. Search for “PushAlertToDB” in the search bar.

ii) Find the list of hosts in the “Details” column of the Adapter tab (see the screenshot below for reference).

[[File:Alerts debug 1.png]]<br>

iii) Log in over SSH to each node found in step ii and open the "/opt/KHIKA/Cogniyug.ini" file.

[[File:Alerts debug 2.png]]<br>

iv) Verify the database configuration in the "PG_DATABASE" section and correct any parameters as necessary.

[[File:Alerts debug 3.png]]<br>

v) Save the "/opt/KHIKA/Cogniyug.ini" file.<br>
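To review only the "PG_DATABASE" section in step iv without scrolling through the whole file, a small helper like the one below may be convenient. It is a sketch under assumptions: the function name '''print_ini_section''' is hypothetical, and it assumes the file uses plain '''[SECTION]''' headers on their own lines. The output may include database credentials, so avoid pasting it into tickets or chat.

```shell
#!/bin/sh
# Sketch only: hypothetical helper, not shipped with KHIKA.

# Print the non-empty lines of one section of an INI-style file.
# Usage: print_ini_section FILE SECTION
print_ini_section() {
  awk -v want="[$2]" '
    /^\[.*\]/ {
      line = $0
      sub(/[[:space:]]+$/, "", line)   # ignore trailing spaces or CR
      in_section = (line == want)      # track whether we are in the wanted section
      next
    }
    in_section && NF { print }
  ' "$1"
}
```

For example, '''print_ini_section /opt/KHIKA/Cogniyug.ini PG_DATABASE''' prints just the database settings to verify.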

| + | |||

| + | |||