Difference between revisions of "Define your own enrichment"

Latest revision as of 11:15, 13 June 2019


Introduction

Enrichment, as the word suggests, adds context to streaming data. At its simplest, you can enrich data at run time by looking up values in a CSV file. For example:

- A CSV file can hold inventory information (such as computer name, location, owner and service tag), with the computer name as the primary key. You can use it to enrich Windows AD logs and add context to login events.

- If you have a CSV database of IP addresses with a bad reputation, where the IP address is the primary key and country, city, reputation etc. are the other columns, you can correlate it with streaming firewall/proxy/WAF logs. These logs carry source and/or destination IP addresses that can be used for the lookup, so any communication with a bad IP gets enriched.

There are many more uses for static CSV based enrichment. You can also change these CSV files dynamically (periodically or on events), and KHIKA will consume the changes immediately, in real time.
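The idea behind a static CSV lookup can be sketched in a few lines of Python. This is only an illustration of the concept, not KHIKA's implementation: the inventory columns, the `workstation` event field and the helper names `load_lookup` and `enrich` are all assumptions made for the example.

```python
import csv
import io

# Hypothetical inventory lookup; in practice this would be a CSV file on disk.
INVENTORY_CSV = """computer_name,location,owner,service_tag
WS-0042,Pune,alice,ABC1234
WS-0043,Mumbai,bob,XYZ9876
"""


def load_lookup(csv_text, key_column):
    """Index the CSV rows by the primary-key column."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row[key_column]: row for row in reader}


def enrich(event, lookup, event_key_field):
    """Return a copy of the event with the lookup columns added on a key match."""
    enriched = dict(event)
    row = lookup.get(event.get(event_key_field))
    if row is not None:
        for column, value in row.items():
            # Keep the original event fields if a column name collides.
            enriched.setdefault(column, value)
    return enriched


lookup = load_lookup(INVENTORY_CSV, "computer_name")
login_event = {"eventid": "4624", "workstation": "WS-0042", "user": "user1"}
enriched_event = enrich(login_event, lookup, "workstation")
print(enriched_event)
```

An event whose key has no match in the lookup passes through unchanged, which is the behaviour you would normally want from an enrichment step.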

A more advanced feature is KHIKA's ability to build a dynamic database from one streaming data source and use it to enrich other data sources. With it, you can correlate, or stitch together, logs from different data sources at run time, provided they have a field in common. For example:

- We can build an IP-to-username database at run time from AD logs, with the IP address as the primary key. Linux login events can then refer to this database: Linux logs contain the IP address of the login workstation but not the AD username (Linux usernames are different from AD usernames), so the Windows AD user can be added to them.

- We can extract the session ID and IP address from web logs, with the session ID as the primary key, and use them to add the client IP address to application logs, which have the session ID but not the client's IP address.

Let us walk through an example, starting with simple enrichment using a static CSV file.

Example of CSV Based Enrichment

Please refer to the section Data Enrichment in KHIKA in the User Guide to understand how CSV based enrichment works in KHIKA.

Example of Building and Referring Dynamic Enrichment

You can build any number of primary-key based tables from any streaming KHIKA data source by selecting any field as the primary key. The key can have values associated with it from any fields present in the records. Any other streaming KHIKA data source can then refer to this database: KHIKA matches the key against a selected field and, when a match is found, uses the values from the database to enrich the message in real time. This is how KHIKA correlates logs across data sources in real time.

In the example below we will build a database from Windows AD login logs. The primary key is the IP address of the workstation from which the login happens, and the value is the username (WindowsUser). This gives us a workstationIP to WindowsUser database. The Linux logs then refer to this database: we take the IP address from the Linux login message, match it against the database to fetch the Windows user, and add that user to the Linux login message. This tells us which AD user actually logged in to Linux, which is a very useful log correlation. Let's see how to build this in KHIKA.

Building a dynamic database in KHIKA

The first step is to build the dynamic database. The steps are explained below:

1. Log in to the KHIKA GUI as a customer (you must be an admin user of the customer).

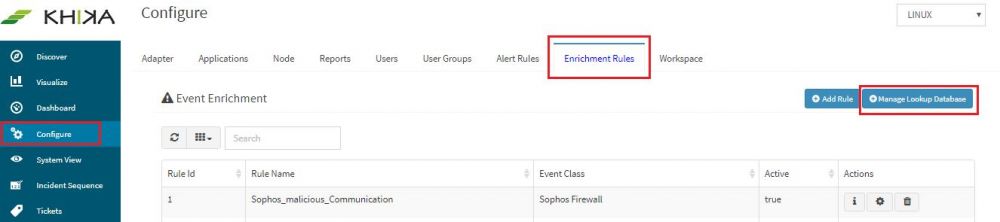

2. Click "Configure" in the side menu, then click "Enrichment Rules".

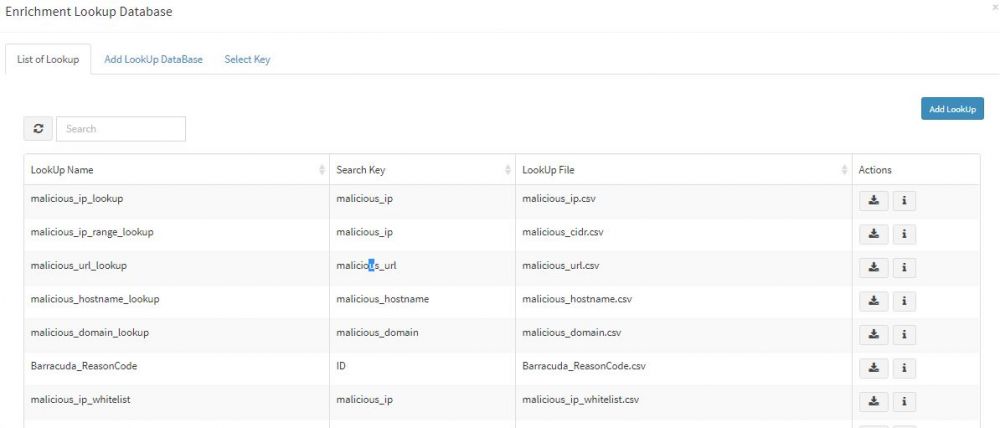

3. Click "Manage Lookup Database".

This shows the existing databases in KHIKA. We will first create a new database and define the schema of our simple dynamic database.

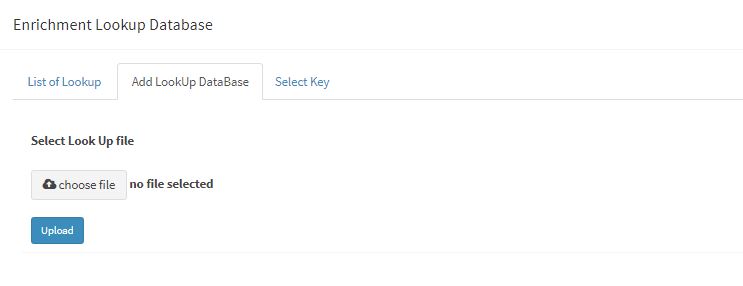

4. Click "Add Lookup" to open a pop-up where we upload the CSV file that defines the schema of the database.

5. On your local computer, create a simple CSV file containing only the header row (no values, because the values will be added dynamically from the streaming data). Add two comma-separated columns as shown:

tl_win_ip,tl_win_user

Save the file as "IP_to_User_Mapping_Lookup.csv" and close it.

6. In the KHIKA web GUI, upload the file "IP_to_User_Mapping_Lookup.csv".

7. The uploaded file is our new database and should be visible in the "Enrichment Lookup Database" screen. You can even search for it by typing the name.

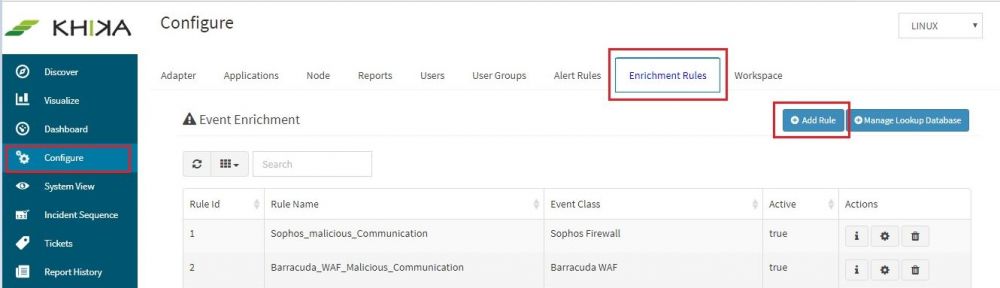

8. We have defined the schema, but our database is still empty. It is now time to define the rule in KHIKA that will populate the database. Click "Add Rule".

This opens a form where we define the rule to Build the database.

- Give the rule an appropriate name; here we have named it Windows_IP_to_User_mapping_rule. The customer name is appended automatically.

- Select "Windows Event Class" as the event class. Note that we are going to use Windows login event 4624 to build the database.

- Enrich Type must be set to 'Build', as we are building the database here.

- Order Number is set to 1. The order number can range from 1 to 10; the lower the number, the higher the priority KHIKA gives to the rule. Since this is a Build rule, we want it to be executed first and hence set the Order Number to 1.

- Now we set the key column (remember, our primary key is the IP address, with column header tl_win_ip). We select the field network_information_source_network_address as the key field. Essentially, we are telling KHIKA to pick the value from this field and populate the database.

- We set the filter using appropriate evaluation types to tell KHIKA exactly which message to select (i.e. the login message with eventid=4624) to build the database. This is explained in the screenshot below.

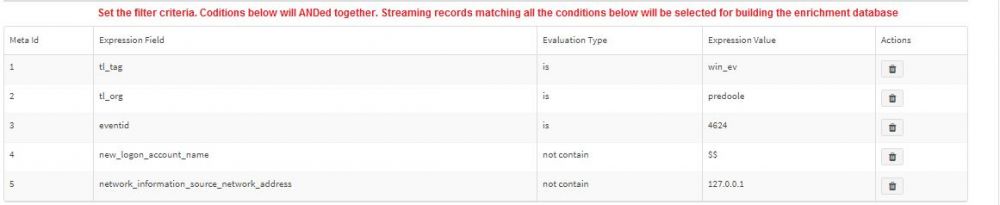

Note that tl_tag=win_ev and tl_org=name_of_customer are already pre-populated for us. We then select eventid from the drop-down, set the evaluation type to "is" and enter 4624 (the Windows logon event) as the Expression value. Note that this event (when captured on AD) records the IP address of the workstation in the field network_information_source_network_address, which is the primary key of our database.

We also add filter criteria using the "not contain" clause so that unimportant events do not pollute the database. We set new_logon_account_name to not contain the "$" character (note that we enter a double $, i.e. $$) and set network_information_source_network_address to not contain 127.0.0.1. Such records are useless and, more importantly, would pollute the database with wrong key-value pairs. It is extremely important to know your data before you write these rules.
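To make the selection criteria concrete, here is a minimal Python sketch of the same filter. It only mimics the logic described above, it is not how KHIKA evaluates rules internally, and the function name `matches_build_filter` is an assumption. The pre-populated tl_tag and tl_org conditions are left out for brevity.

```python
def matches_build_filter(event):
    """Mimic the Build rule's filter: eventid is 4624, and two 'not contain' clauses."""
    account = event.get("new_logon_account_name", "")
    address = event.get("network_information_source_network_address", "")
    return (
        event.get("eventid") == "4624"
        # A single "$" here; the doubled "$$" is only how it is typed in the GUI.
        and "$" not in account
        and "127.0.0.1" not in address
    )


user_login = {
    "eventid": "4624",
    "network_information_source_network_address": "192.168.1.101",
    "new_logon_account_name": "user1",
}
machine_account_login = dict(user_login, new_logon_account_name="xyz$")
loopback_login = dict(
    user_login, network_information_source_network_address="127.0.0.1"
)

print(matches_build_filter(user_login))
print(matches_build_filter(machine_account_login))
print(matches_build_filter(loopback_login))
```

Only the first event passes; the machine account and the loopback login are rejected by the two "not contain" clauses.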

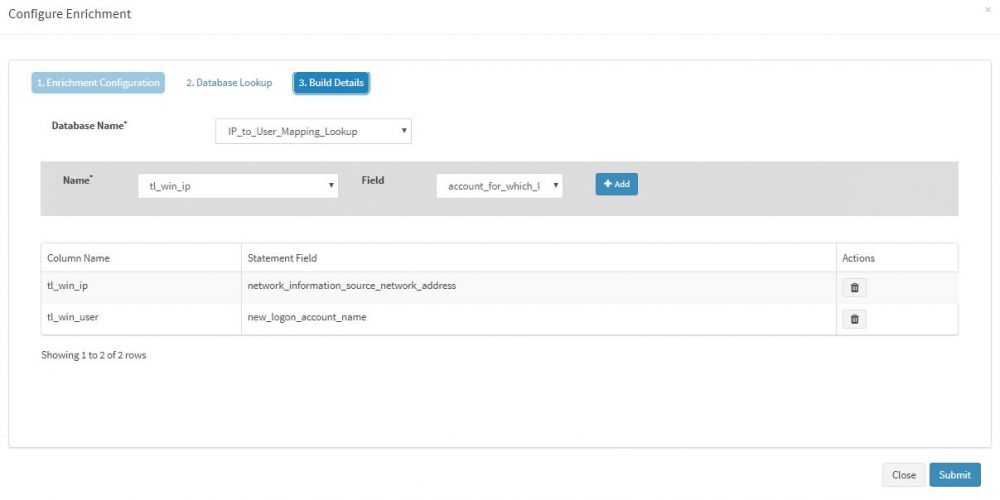

Finally, we click the "Build Details" tab, select IP_to_User_Mapping_Lookup as the Database Name and set the key-value pairs. Note that we set:

tl_win_ip = network_information_source_network_address and tl_win_user = new_logon_account_name

Click "Submit" to make this Enrichment Rule active.

Soon after you make this rule active, KHIKA starts building the database as events matching the criteria occur on AD. Suppose the following login events occur on AD:

1. eventid=4624 network_information_source_network_address=192.168.1.101 new_logon_account_name=user1

2. eventid=4624 network_information_source_network_address=192.168.1.101 new_logon_account_name=xyz$

3. eventid=4624 network_information_source_network_address=192.168.1.102 new_logon_account_name=user2

4. eventid=4624 network_information_source_network_address=192.168.1.103 new_logon_account_name=user3

5. eventid=4624 network_information_source_network_address=127.0.0.1 new_logon_account_name=admin

6. eventid=4624 network_information_source_network_address=192.168.1.104 new_logon_account_name=user4

This results in the following database:

tl_win_ip,tl_win_user

192.168.1.101,user1

192.168.1.102,user2

192.168.1.103,user3

192.168.1.104,user4

Note that event 2 and event 5 are discarded because they do not meet the event selection criteria. Event 2 has a $ character in the new_logon_account_name field, and event 5 has the value 127.0.0.1 in the network_information_source_network_address field.

After some time, a new event appears with the following details:

1. eventid=4624 network_information_source_network_address=192.168.1.101 new_logon_account_name=user4

This updates the database, which now looks as shown below:

tl_win_ip,tl_win_user

192.168.1.101,user4

192.168.1.102,user2

192.168.1.103,user3

192.168.1.104,user4

The new record replaces the older value stored for the primary key 192.168.1.101 with the latest value, "user4".

With a clear understanding of how to Build the dynamic database, we can now move on and use this database by referring to it.
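The worked example above can be reproduced with a small Python simulation. This is a sketch under stated assumptions: the database is modelled as a plain dictionary keyed by tl_win_ip, and the helper names `make_event` and `build_lookup` are hypothetical, not part of KHIKA.

```python
def make_event(ip, user):
    """Build a simplified Windows logon event with only the fields the rule uses."""
    return {
        "eventid": "4624",
        "network_information_source_network_address": ip,
        "new_logon_account_name": user,
    }


def build_lookup(events, database):
    """Apply the Build rule to each event; a later event overwrites an earlier key."""
    for event in events:
        ip = event["network_information_source_network_address"]
        user = event["new_logon_account_name"]
        if event["eventid"] != "4624" or "$" in user or "127.0.0.1" in ip:
            continue  # filtered out, never reaches the database
        database[ip] = user  # tl_win_ip -> tl_win_user
    return database


events = [
    make_event("192.168.1.101", "user1"),
    make_event("192.168.1.101", "xyz$"),
    make_event("192.168.1.102", "user2"),
    make_event("192.168.1.103", "user3"),
    make_event("127.0.0.1", "admin"),
    make_event("192.168.1.104", "user4"),
]

initial = build_lookup(events, {})
# The later logon from 192.168.1.101 replaces user1 with user4.
updated = build_lookup([make_event("192.168.1.101", "user4")], dict(initial))

print(initial)
print(updated)
```

Because the IP address is the primary key, each workstation maps to the user who logged in from it most recently, which is the behaviour described above.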

Referring to the dynamic database in KHIKA

Let us use the IP_to_User_Mapping_Lookup dynamic database (which we built in the previous section from Windows AD events) to correlate Linux login messages with Windows users. The goal is to associate a Windows AD user with a Linux user, because in most organisations these two users are different. Linux users are typically generic accounts that are difficult to associate with the real human user behind them, whereas AD users typically identify the real human user.

Let's get started.

1. Log in as a customer admin user, select the appropriate workspace and click Configure --> Enrichment Rules --> Add Rule.

2. Fill in the appropriate details in the form as shown below.

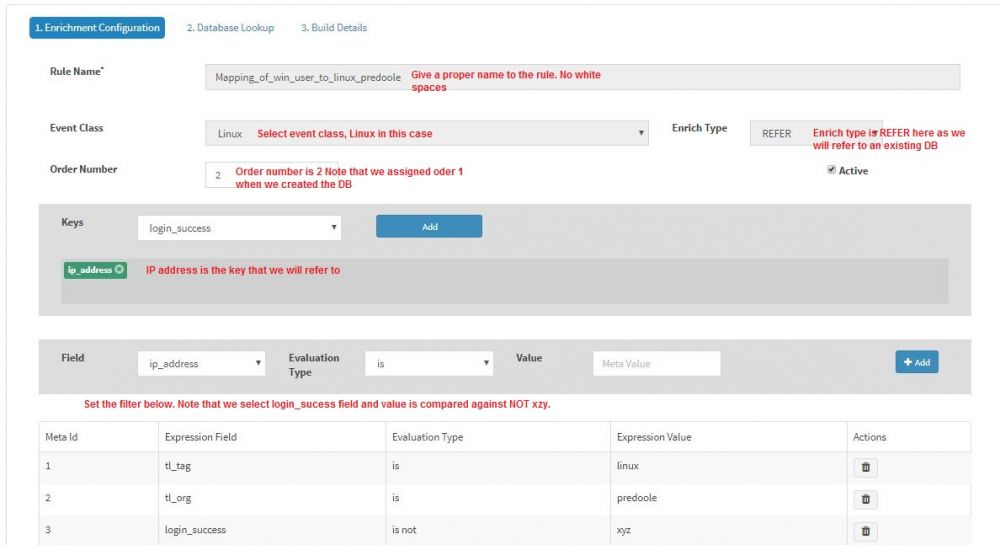

- 'Rule Name' can be any meaningful name without white spaces. We name it Mapping_of_win_user_to_linux. The customer name is appended automatically.

- For 'Event class', select Linux, as we wish to enrich the Linux messages.

- 'Enrich Type' is set to REFER. (Note that we used BUILD in the last section. We use REFER now because we are using an already built database.)

- 'Order Number' is set to 2. (Note that we used Order Number 1 in the previous section. We set it to 2 this time because we want the REFER rule to execute after the BUILD rule.)

- We set Keys to ip_address, which is available in the Linux event class. This is the key that KHIKA will match against the primary key of the lookup database.

- Then we go to the "Database Lookup" tab. (Note that in the previous section we used the 'Build Details' tab, as we were building the database.)

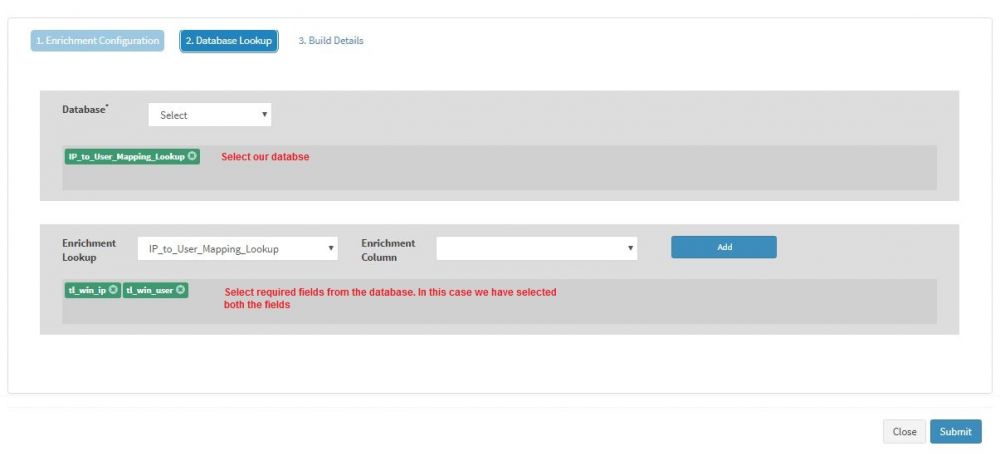

- We select our database name, IP_to_User_Mapping_Lookup, from the drop-down.

- Then we select the Enrichment Columns, 'tl_win_ip' and 'tl_win_user', from the drop-down.

- Finally, we click Submit.

With this, we tell KHIKA to match the key "ip_address" from every Linux event against the primary key of the database, tl_win_ip, and, when a match is found, to add tl_win_ip and tl_win_user from the database record to the Linux event.
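Conceptually, the Refer rule behaves like the following Python sketch. It is an illustration only: the database is again modelled as a dictionary, and the `refer` function and the `user` field of the sample Linux event are assumptions made for the example.

```python
# State of the lookup after the Build rule has run (tl_win_ip -> tl_win_user).
ip_to_user = {
    "192.168.1.101": "user4",
    "192.168.1.102": "user2",
}


def refer(event, database, key_field="ip_address"):
    """Add tl_win_ip and tl_win_user to the event when its key is in the database."""
    enriched = dict(event)
    ip = event.get(key_field)
    if ip in database:
        enriched["tl_win_ip"] = ip
        enriched["tl_win_user"] = database[ip]
    return enriched


linux_login = {"ip_address": "192.168.1.102", "user": "root", "login_success": "true"}
unknown_login = {"ip_address": "10.0.0.7", "user": "root", "login_success": "true"}

print(refer(linux_login, ip_to_user))
print(refer(unknown_login, ip_to_user))
```

The first event gains the two enrichment columns, so the generic Linux account can be traced back to an AD user; the second has no matching key and is left as it was.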

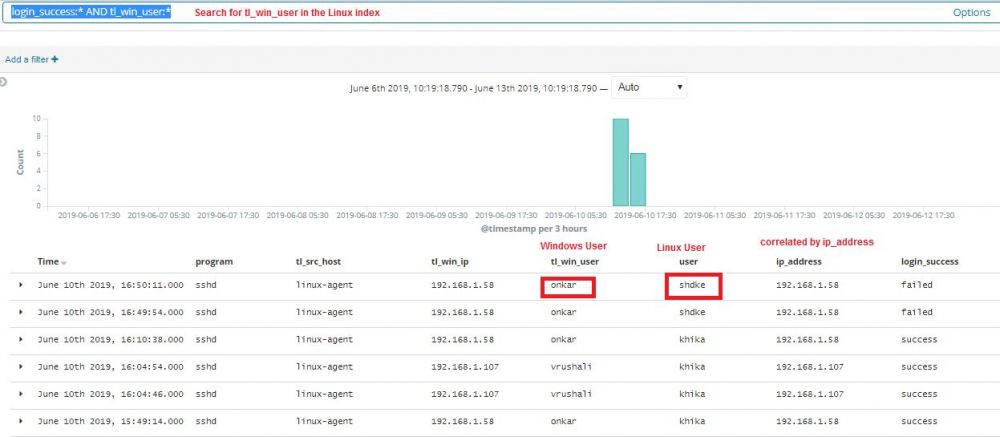

After making this rule active (wait for about 5 minute), do some windows logins via AD (so that the database we built sometime back starts receiving the records of windows login), followed by some Linux logins (successful or failed). We expect Windows User to shown in Linux events as an additional field 'tl_win_user'. To verify, you can search for lucene query string login_success:* AND tl_win_user:* in Linux index from the discover page. If everything is working fine, it will show following results
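In Lucene query string syntax, a clause such as tl_win_user:* matches records in which that field has a value, so the verification query selects Linux login events that were successfully enriched. The Python sketch below emulates that check on in-memory records; the sample events and the function name `matches_verification_query` are hypothetical.

```python
def matches_verification_query(event):
    """Emulate `login_success:* AND tl_win_user:*`: both fields must have a value."""
    return (
        event.get("login_success") is not None
        and event.get("tl_win_user") is not None
    )


sample_events = [
    {"login_success": "true", "ip_address": "192.168.1.102", "tl_win_user": "user2"},
    {"login_success": "false", "ip_address": "10.0.0.7"},  # no lookup match
    {"ip_address": "192.168.1.101", "tl_win_user": "user4"},  # not a login event
]

verified = [event for event in sample_events if matches_verification_query(event)]
print(verified)
```

Only the first record satisfies both clauses. If the real query returns nothing, the usual suspects are a Build rule that has not yet seen any matching AD logins or a key field that does not match.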

This is how we can begin correlating logs across data sources in KHIKA.

Summary

- You must know which data sources you want to correlate.

- The data sources must share some common field (such as IP, User or Session ID) on which the logs can be correlated.

- First build the database, setting the primary key to the field you want to use to correlate the data with the other data source.

- Then refer to this database from the other data source (Event Class) using the primary key.