Difference between revisions of "Data Archival in KHIKA"

Amit sharma (talk | contribs) (→Overview) |

Amit sharma (talk | contribs) |


Revision as of 09:32, 14 June 2019


Overview

The purpose of this section is to give KHIKA administrators and users an understanding of the complete life cycle of data stored in KHIKA. In KHIKA, time series log data from data sources is segregated into one or more workspaces, so that data from each distinct data source is typically stored in its own workspace's index. On receiving log data, KHIKA identifies the workspace associated with the data source and stores the data in that workspace's daily index. In other words, data received today is stored in today's data index, while data received tomorrow will be stored in tomorrow's data index.
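The per-workspace, per-day indexing described above can be pictured with a minimal Python sketch. The naming scheme used here (workspace slug plus date) is a hypothetical illustration; the actual index names KHIKA uses are not stated in this document.

```python
from datetime import date


def daily_index_name(workspace: str, day: date) -> str:
    """Build an illustrative daily index name for a workspace.

    The "<workspace>-YYYY.MM.DD" pattern is an assumption made for this
    sketch, not KHIKA's documented naming convention.
    """
    slug = workspace.strip().lower().replace(" ", "_")
    return f"{slug}-{day:%Y.%m.%d}"


# Data received on two consecutive days lands in two different indices.
print(daily_index_name("Linux Servers", date(2019, 3, 1)))
print(daily_index_name("Linux Servers", date(2019, 3, 2)))
```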

Since log data accumulated over a period of time tends to become large (more than a few TB), KHIKA data is categorized into the following two types, in order to maintain optimal application performance and to ensure prudent use of IT infrastructure and resources:

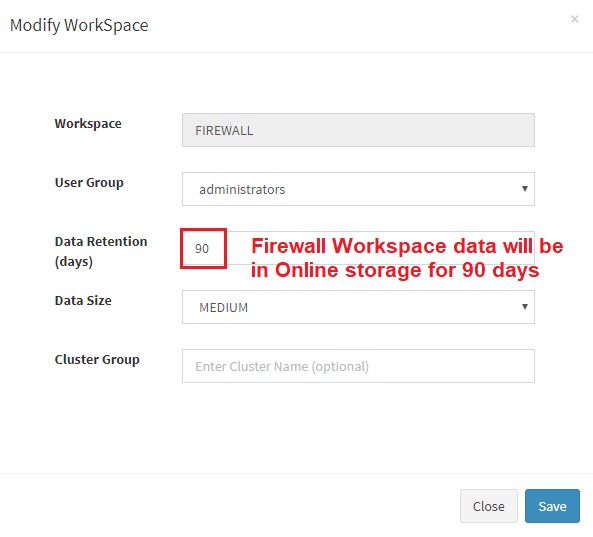

- Online storage - Data that needs to be readily searchable via the KHIKA UI is referred to as online data. The number of days for which data is kept in online storage is controlled by the workspace-level parameter “TIME-TO-LIVE” (TTL), which is configurable as per customer requirements.

- Offline storage - Data that is older than the Time-To-Live period (and therefore not searchable via the KHIKA UI) but must be retained for compliance or long-term retention is referred to as offline data.

Data Archival Workflow

On receiving log data from data sources, KHIKA indexes and stores the data as online data. Online data is stored on a high-performance storage device (for example, an SSD or a 15K RPM disk), and the number of days for which it is retained is controlled by the workspace-level parameter “TIME-TO-LIVE” (TTL), which can be configured as per customer requirements. The default TTL, or online data retention period, for a workspace is 90 days.

As log data is received and stored in KHIKA, the Data Archival workflow automatically manages the life cycle of the data by moving it from online storage to offline storage based on the data retention period. Consider the following example, which uses a workspace named "Linux Servers" with its retention period set to 30 days.

- Log data from 1st of March is stored in KHIKA for this workspace. This data would be stored in online data storage.

- On 31st March, the 30 day retention period for data of 1st March has elapsed.

- The Data Archival workflow takes a snapshot of the data index for 1st March from Elasticsearch. The snapshot is compressed and copied to the offline data storage for archival.

- The workflow computes a checksum for the stored snapshot and maintains it in the KHIKA database. The checksum is used to verify the integrity of the archived data.

- The data index for 1st March is then removed from elasticsearch.

- A similar process is executed on 1st April to archive the data of 2nd March.
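The date arithmetic in the steps above can be sketched in Python as follows. This is only an illustration of the schedule implied by the example; the function names are hypothetical and do not correspond to any KHIKA API.

```python
from datetime import date, timedelta


def archival_date(data_day: date, retention_days: int) -> date:
    """Day on which the index for data_day becomes due for archival."""
    return data_day + timedelta(days=retention_days)


def days_due_for_archival(online_days, today: date, retention_days: int):
    """Return the online daily indices whose retention period has elapsed."""
    return sorted(
        d for d in online_days if archival_date(d, retention_days) <= today
    )


# "Linux Servers" example: retention period of 30 days.
print(archival_date(date(2019, 3, 1), 30))  # 2019-03-31
print(archival_date(date(2019, 3, 2), 30))  # 2019-04-01
```

With a 30-day retention period, the index for 1st March becomes due on 31st March and the index for 2nd March on 1st April, matching the example.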

Checking Data Archival details

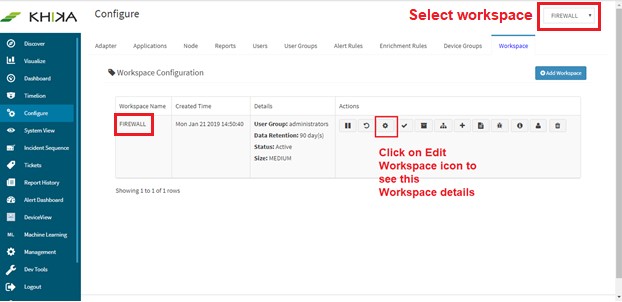

Go to Configure in the left pane and select the Workspace tab.

In this example, where the workspace retention period is 90 days, the KHIKA Data Archival procedure automatically moves data in the workspace to offline storage only once it is 91 days old. Newer data stays in online storage until it has been retained for 90 days. As part of the archival process, before the older data is moved to offline storage, it is compressed and its checksum is computed. KHIKA maintains a checksum for each archived data directory and uses it to verify the integrity of the archived data.

Note: If online storage disk utilisation reaches 80% (that is, the disk is 80% full), the oldest day's data is moved to offline storage even if it is not yet 91 days old.
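The retention rule and the 80% disk utilisation rule can be combined as in the following Python sketch. It assumes that "91 days old" means the 91st calendar day after the data was received, and that only the single oldest day is moved early when the disk threshold is reached. Both are interpretations made for illustration, and the function names are hypothetical.

```python
import shutil
from datetime import date


def disk_utilisation(path: str) -> float:
    """Fraction of the disk holding `path` that is in use (0.0 to 1.0)."""
    usage = shutil.disk_usage(path)
    return usage.used / usage.total


def pick_days_to_archive(online_days, today: date, retention_days: int,
                         utilisation: float, threshold: float = 0.80):
    """Choose which daily indices to move to offline storage."""
    # Normal rule: everything whose retention period has elapsed.
    due = sorted(d for d in online_days if (today - d).days >= retention_days)
    # Disk pressure rule (assumed): if nothing is due yet but the online
    # disk is at or above the threshold, move the oldest day early.
    if not due and online_days and utilisation >= threshold:
        due = [min(online_days)]
    return due


days = [date(2019, 3, 1), date(2019, 3, 2)]
print(pick_days_to_archive(days, date(2019, 3, 10), 90, utilisation=0.85))
```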

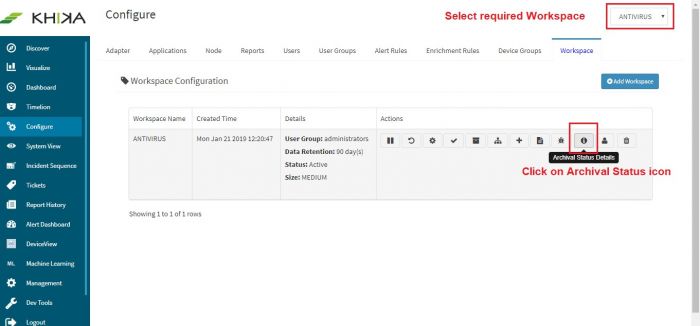

To review the data archival status for a workspace, go to Configure from the main KHIKA menu and select the Workspace tab.

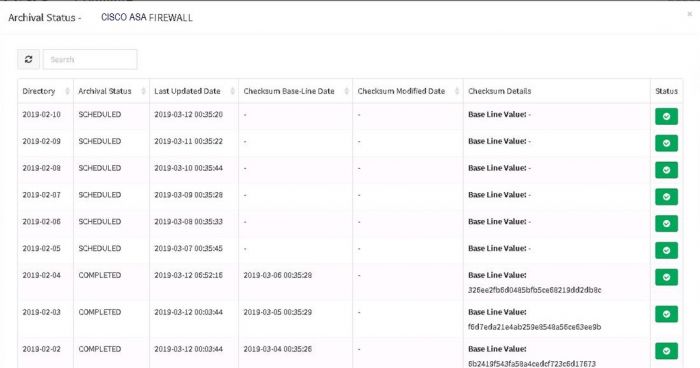

Select the required workspace from the dropdown and click its Archival status icon. A pop-up asks for the from and to dates that the archival report should cover. Select the dates to view the archival status report.

The archival status report shows the status of the archival tasks for the individual day-wise data directories. Note that the report also shows the checksum values for the archived data directories.
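A checksum from the report could, in principle, be compared against an archived file as in the Python sketch below. The checksum algorithm KHIKA uses is not stated in this document, so SHA-256 is an assumption here, and the function names are hypothetical.

```python
import hashlib


def file_checksum(path: str, algorithm: str = "sha256",
                  chunk_size: int = 1 << 20) -> str:
    """Compute the hex digest of a file, reading it in chunks."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_archive(path: str, expected_checksum: str,
                   algorithm: str = "sha256") -> bool:
    """Return True if the file's checksum matches the recorded value."""
    return file_checksum(path, algorithm) == expected_checksum.strip().lower()
```

Reading in chunks keeps memory use flat even for large archives. A mismatch would indicate that the archived data changed after the checksum was recorded.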

Offline storage

If you have implemented KHIKA on premise

You have to provide the storage to which data is moved from online storage once its retention period expires.

As explained in the section above, every workspace has a "Data Retention" value, expressed in days. The oldest day's data in the workspace, once it is one day older than the retention period, has to be moved to secondary storage called the "Offline Storage". For example, say the retention period of your LINUX workspace is 30 days and the workspace holds data beginning from 1st March. On 31st March, the data of 1st March has crossed its retention period. A snapshot of the Elasticsearch data index for 1st March is taken and copied to the offline storage.

Once data moves to offline storage, it is no longer searchable on the Discover screen. However, it can be restored to online storage as needed: if you require older data for an investigation, the index for a specific day can be moved back to online storage.

For larger data volumes, check the daily size of data in KHIKA, then decide how long you want to retain it and estimate the disk size this requires. The retention period may also depend on compliance requirements in your organisation.
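A rough sizing estimate can be worked out as in the following Python sketch. The compression ratio and headroom parameters are assumptions added for illustration; measure the actual daily size of your own archived data before sizing a disk.

```python
def required_offline_gb(daily_gb: float, retention_days: int,
                        compression_ratio: float = 1.0,
                        headroom: float = 0.2) -> float:
    """Estimate offline disk space in GB.

    daily_gb          -- average size of one day's data
    retention_days    -- how long archived data must be kept
    compression_ratio -- archived size / original size (assumed; 1.0 = none)
    headroom          -- extra fraction reserved for growth (assumed)
    """
    return daily_gb * retention_days * compression_ratio * (1 + headroom)


# Example: 10 GB per day kept for one year, no compression, no headroom.
print(required_offline_gb(10, 365, compression_ratio=1.0, headroom=0.0))
```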

The Linux server administrator in your environment has to mount a disk partition for offline storage on the KHIKA App server. This partition is typically larger than the online disk space and can hold your data for up to a year or more. If the offline disk space also fills up, there are two options:

- Increase the offline disk space.

- Manually move the oldest data to another long-term storage device as and when required. (KHIKA does not do this automatically.)

If you have implemented KHIKA as SaaS

Online storage

- KHIKA allows a maximum of 3 GB of data per day in online storage.

- This limit covers data from all devices, across all workspaces.

- Data is retained in KHIKA for 3 days.

- Data is discarded on its 4th day.

For additional online storage and retention, please contact our sales team at info@khika.com

Offline storage

For any queries related to offline storage, please contact our sales team at info@khika.com

Archival Process

A scheduled archival process in KHIKA automatically moves data that has passed its retention period from online to offline storage each day. It follows the "Data Retention" value set in each of your workspaces.

This process runs only when the offline disk is mounted on the KHIKA server.
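Before expecting archival to run, an administrator might confirm that the offline partition is actually mounted. The Python sketch below shows one way to check this on a Linux server. The mount point path is a hypothetical placeholder, not a documented KHIKA location.

```python
import os


def offline_storage_ready(mount_point: str) -> bool:
    """Return True if mount_point exists and is a mounted filesystem."""
    return os.path.ismount(mount_point)


# "/opt/khika_offline" is a hypothetical mount point used for illustration.
if offline_storage_ready("/opt/khika_offline"):
    print("Offline disk is mounted")
else:
    print("Offline disk is not mounted; archival will not run")
```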

Go to the next section to learn more about KHIKA's anti data breach feature, File Integrity Monitoring.